The OpenAI Platform Consolidation Pattern: Learning From Microsoft, AWS, and Meta

OpenAI isn't doing anything new—they're running the exact playbook that destroyed independent software companies three times before. The only difference is speed.

Andrew Hatfield

November 17, 2025

The Pattern You've Seen Before (Even If You Don't Remember It)

Your API bills grew 40% last quarter. OpenAI just announced features that look suspiciously like your top use cases. Your CTO insists "we can always switch providers" while your codebase has GPT-4 specifics hardcoded throughout.

This feels new because AI is new. But the pattern is old.

I've watched platform providers destroy independent software companies using this exact playbook three times over 30 years—Microsoft's embrace/extend/extinguish in the 1990s, Google's observe/control/monetize through search and analytics in the 2000s, AWS's offer/watch/copy in the 2010s. Now OpenAI is running it again.

If you weren't selling software during those cycles, you didn't watch Netscape, Novell, or thousands of AWS marketplace vendors get obsoleted. But pattern recognition matters more than current performance.

The companies that survived previous cycles didn't optimize their platform integration faster. They recognized the architectural vulnerability early and transformed before the squeeze became inescapable. The companies that didn't became case studies in what not to do.

The Three-Phase Pattern That Repeats Every Cycle

Platform consolidation follows a predictable sequence regardless of the technology involved. Understanding this pattern reveals why your current AI strategy might be training your replacement.

Phase 1: Attract (Offer Below-Market Value)

Platform providers start by making their infrastructure irresistibly cheap or free, creating rapid adoption before competitors can establish alternatives.

Microsoft (1990s): Free developer tools, comprehensive MSDN documentation, and Windows integration made building for Windows the obvious choice. The platform appeared open—thousands of documented APIs for developers to build applications that ran on the dominant desktop OS.

Google (2000s): Free search, Google Analytics, Gmail, Google Maps APIs, and Chrome gave millions of businesses critical infrastructure while appearing open and developer-friendly. The value proposition was irresistible—world-class tools at zero cost, with straightforward integration and comprehensive documentation.

AWS (2010s): Below-cost infrastructure pricing—EC2 instances, S3 storage, RDS databases—made cloud migration economically rational for most companies. AWS Marketplace offered independent software vendors distribution and customer access in exchange for revenue share and usage visibility. The infrastructure was genuinely valuable, and the marketplace appeared to be a win-win partnership.

Meta (2010s-20s): Platform distribution and API access let developers build on Facebook's network while Meta monitored usage patterns to identify acquisition targets—this is how they spotted WhatsApp's growth trajectory before acquiring them.

OpenAI (2024-25): $0.25 per million input tokens for GPT-5 mini (their most commonly used production model) makes API integration faster and cheaper than building proprietary alternatives. This pricing is deliberately subsidized—OpenAI currently spends $1.69 for every dollar of revenue, burning billions annually to build market share before competitors can establish alternatives (Fortune, November 2025). The below-cost pricing accelerates dependency while OpenAI builds infrastructure capacity.

The below-market pricing isn't altruism. It's customer acquisition strategy designed to create dependency before competitive alternatives mature.

Phase 2: Learn (Observe Usage Patterns)

Once adoption reaches critical mass, platform providers monitor how customers use their infrastructure to identify high-value opportunities.

Microsoft: Customer support call patterns revealed which applications users struggled with most. MSDN documentation access showed which APIs developers used most frequently. Public revenue data showed which software categories generated the most value. This intelligence informed which features to integrate natively into Windows and Office—word processing, spreadsheets, email, browsers.

Google: Search queries revealed which topics and questions users cared about most. Analytics data showed which business models generated the most traffic and revenue. Chrome browser telemetry revealed which web applications users engaged with daily. Map API usage patterns showed which location-based services had traction. This intelligence guided product development—Google Shopping for e-commerce, local business features for reviews and recommendations, Gmail for email, Google Docs for productivity.

AWS: Marketplace metrics revealed which categories had the strongest customer demand and highest willingness to pay. CloudWatch monitoring showed which services customers used most heavily. Support ticket patterns revealed which features customers needed most urgently. Customer deployment architectures showed which third-party services were most critical to workflows. This intelligence informed which managed services to build natively—databases (RDS), caching (ElastiCache), search (OpenSearch), container orchestration (ECS, EKS).

Meta: API monitoring identified which third-party services were growing fastest. Meta used this intelligence to acquire WhatsApp before it became a direct threat. They attempted the same with Giphy in 2020, offering API access while monitoring competitor usage patterns. The UK's Competition and Markets Authority ordered Meta to divest Giphy in November 2021 after finding Meta could "require rivals like TikTok, Twitter and Snapchat to provide it with more user data in order to access Giphy GIFs", which amounts to using API access as leverage for competitive intelligence extraction (UK CMA, November 2021).

OpenAI: Every API call reveals workflow logic, competitive intelligence, and implementation patterns. Usage data shows which categories generate the most revenue and which features customers value most.

The intelligence gathering extends beyond OpenAI's direct observation. Their vendor ecosystem also collects data about you. When Mixpanel—OpenAI's frontend analytics provider—was breached in November 2025, attackers gained names, emails, and organization IDs of API customers. You're not just teaching OpenAI your workflows. You're exposing your team's identity and technical environment to every vendor in their supply chain—vendors you've never heard of and can't evaluate.

This isn't passive observation. It's active market research funded by your API spend.

The learning gets more precise when financial signals are added. Agent-native payment infrastructure like x402 reveals not just what workflows are popular, but what they're worth—accelerating the prioritization of which capabilities to build natively.

Phase 3: Obsolete (Build Native Competing Features)

After learning which use cases create the most value, platform providers build native alternatives with structural advantages independent developers can't match.

Microsoft: Internet Explorer bundled natively in Windows destroyed Netscape within three years. Office integration obsoleted WordPerfect and Lotus 1-2-3. The competitive advantage wasn't just bundling—Microsoft applications used undocumented Windows APIs that provided better performance and stability than competitors building on publicly documented interfaces. When WordPerfect or Lotus hit OS-level "bugs," Microsoft Word and Excel (with internal knowledge) worked perfectly.

Google: Native shopping results marginalized comparison shopping sites like Nextag and Shopzilla. Direct answers in search eliminated traffic to weather sites, calculator tools, and dictionary services. Local business features replaced vertical review sites like Yelp and TripAdvisor in search prominence. The competitive advantage was algorithmic control—Google could adjust search rankings to favor their own properties while claiming neutrality in serving "the best results." Content publishers dependent on Google search traffic saw referrals decline 60%+ after algorithm updates prioritized Google-owned properties.

AWS: RDS (managed relational databases) and later DocumentDB competed directly with database-as-a-service marketplace vendors, MongoDB among them. ElastiCache cut into Redis Labs' managed caching revenue. OpenSearch competed with Elasticsearch after years of learning usage patterns through the marketplace. The competitive advantage was structural: AWS-native services had built-in integration with IAM, CloudWatch, VPC, and other AWS infrastructure that third-party services couldn't match, plus preferential placement in console navigation, documentation, and cost optimization recommendations. Independent vendors either accepted lower margins and reduced control, pivoted to enterprise features AWS wouldn't build, or got acquired at fractions of their potential value.

Meta: Copied Snapchat Stories after monitoring engagement patterns. Acquired Instagram and WhatsApp before they could build competing social graphs. Attempted to acquire Giphy to control GIF distribution across competing platforms.

OpenAI: ChatGPT Plugins gave way to GPTs, then the GPT Store, and now Operator, each iteration replacing API use cases with native features. But OpenAI's obsolescence pattern is fundamentally different. Previous platforms replaced infrastructure and application layers: Microsoft obsoleted productivity apps, AWS obsoleted database hosting. OpenAI is obsoleting entire business categories.

Company Knowledge doesn't just access your tools—it learns how your business makes decisions across Slack, Google Docs, HubSpot, and GitHub. Atlas doesn't just browse the web—it observes and learns every workflow. AgentBuilder doesn't just let you build automation—it captures the business logic that makes your category valuable, then uses that intelligence to build native replacements.

The job board initiative isn't about hiring. It's multi-vector business intelligence: hiring patterns reveal product roadmaps, compensation data exposes margin structure, role descriptions telegraph competitive positioning. Every data point across 500M+ annual hiring decisions teaches OpenAI how businesses scale, compete, and grow—then that intelligence feeds native features that replace the SaaS tools that help companies make those decisions.

OpenAI's $1.4 trillion in data center commitments over eight years creates computational advantages independent vendors can't match (TechCrunch, November 2025)—when they build native competing features, they can offer better performance at lower prices because they own the infrastructure end-to-end.

Here's how the pattern compresses with each cycle:

| Provider | Phase 1: Attract | Phase 2: Learn | Phase 3: Obsolete | Timeline |
|---|---|---|---|---|
| Microsoft (1990s) | Free dev tools | API usage patterns | Native IE/Office integration | 5-7 years |
| Google (2000s) | Free search/analytics | User behavior data | Native shopping/reviews | 4-6 years |
| AWS (2010s) | Below-cost infrastructure | Marketplace metrics | RDS/ElastiCache/OpenSearch | 3-5 years |
| Meta (2010s-20s) | Platform distribution | API monitoring for acquisitions | Copy (Stories) or Acquire (Instagram, WhatsApp) | 18-24 months |
| OpenAI (2024-25) | $0.25/M tokens APIs | Workflow intelligence via API calls | Plugins → GPTs → Operator → Category replacement | 12-18 months |

The timeline compression isn't accidental. Each cycle builds on infrastructure from the previous one, accelerating the path from attraction to obsolescence.

Why Each Cycle Compresses (And Why AI Is Fastest)

Technology doesn't just "move faster" in some vague sense. Specific structural changes enable each consolidation cycle to compress timelines further than the last.

1. Capital Efficiency Improves Exponentially

Microsoft needed years to build distribution channels—physical software, retail partnerships, enterprise sales teams. AWS needed global datacenters before offering cloud services. OpenAI rents compute from Microsoft and scales instantly. What required billions in infrastructure investment now requires API keys.

This capital efficiency means platform providers can enter new categories without the build-out time that previously slowed consolidation.

2. Developer Velocity Accelerates

DevOps practices, cloud infrastructure, containerization, and now AI coding assistants have compressed development timelines by 10-30x. What took six months to build in 2010 takes six weeks today with Cursor and Lovable.

This velocity works both ways—it helps you ship features faster, but it helps platform providers ship competing features even faster since they have more resources and better infrastructure.

3. Market Sophistication Increases

Enterprise buyers adopted cloud infrastructure over five years (2010-2015). They adopted AI capabilities in 18 months (2023-2024). Each technology wave trains buyers to adopt faster, reducing the time platform providers need to establish market dominance.

Faster adoption means faster learning for platform providers, which means faster competitive feature development.

4. Network Effects Compound

Every API call teaches OpenAI's models. Every customer makes them smarter. Every workflow adds training data. Improvement velocity isn't linear—it's exponential. The more you use their APIs, the better their competing features become.

This creates a perverse incentive structure: Your success directly funds and informs their ability to compete with you.

5. API Monitoring Enables Real-Time Pattern Recognition

Platform providers don't need to wait for market signals anymore—they watch API usage in real-time to identify opportunities.

Meta didn't guess that WhatsApp would be valuable. They monitored API usage patterns showing exponential messaging growth and acquired them before the threat materialized. They attempted the same playbook with Giphy, watching how competitors used GIF APIs to distribute content. Only regulatory intervention stopped that consolidation.

This isn't speculation—the UK CMA's forced divestiture order documented Meta's strategy: "Facebook terminated Giphy's advertising services at the time of the merger, removing an important source of potential competition."

When regulators force reversals of platform acquisitions built on API monitoring, it validates the architectural risk. Your API dependencies aren't just technical—they're strategic intelligence channels teaching platform providers which categories to enter next.

This pattern is standard practice in tech M&A. Large companies routinely make strategic investments in startups, take board seats or observer rights, monitor metrics and market traction, then decide whether to acquire or copy. I've worked for companies on both sides of this dynamic. It's how platform consolidation operates.

Timeline evidence shows the compression:

| Company | Cycle Duration | Visual |
|---|---|---|
| Microsoft (1990s) | 5-7 years | ████████████████ |
| Google (2000s) | 4-6 years | ████████████ |
| AWS (2010s) | 3-5 years | ██████████ |
| Meta (2010s-20s) | 18-24 months | █████ |
| OpenAI (2024-25) | 12-18 months | ███ |

Each cycle runs shorter than the last, and the steepest drop came between AWS and Meta, when the timeline fell by more than half. If the pattern holds, you have 12-18 months to architect for independence before it's too late.
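As a rough check on the compression, the ranges in the table can be compared by midpoint. This is a minimal sketch of that arithmetic. Converting each range to months and using the midpoint as the representative value are assumptions made for illustration, not part of the original analysis.

```python
# Cycle durations from the table, expressed as (low, high) ranges in months.
cycles = {
    "Microsoft": (60, 84),
    "Google": (48, 72),
    "AWS": (36, 60),
    "Meta": (18, 24),
    "OpenAI": (12, 18),
}


def midpoint(duration_range):
    """Midpoint of a (low, high) range, used as a single representative value."""
    low, high = duration_range
    return (low + high) / 2


names = list(cycles)
midpoints = [midpoint(cycles[name]) for name in names]

# Ratio of each cycle's midpoint to the one before it (below 1.0 means shorter).
ratios = {
    f"{prev} -> {cur}": round(b / a, 2)
    for prev, cur, a, b in zip(names, names[1:], midpoints, midpoints[1:])
}

# Total compression from the first cycle to the last.
overall = round(midpoints[-1] / midpoints[0], 2)

for step, ratio in ratios.items():
    print(f"{step}: {ratio}")
print(f"Microsoft -> OpenAI overall: {overall}")
```

On these midpoints the step-to-step ratio varies from about 0.44 (AWS to Meta) to about 0.83 (Microsoft to Google), so the compression is uneven rather than a constant factor. End to end, the OpenAI cycle is roughly a fifth the length of the Microsoft one.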

The Dependency Blindness Phenomenon

There's a psychological pattern that repeats across every platform consolidation cycle. Companies with the highest dependency consistently assess their strategic risk as lowest.

Microsoft era (1990s):

"We add value on top of Windows—our application layer is the moat."

Google era (2000s):

"We aggregate public information Google can't replicate."

AWS era (2010s):

"Our IP is in the application logic, not the infrastructure."

OpenAI era (2024-25):

"I personally am not worried about data leaking to the models."

That last quote comes directly from a CTO in our September 2025 survey of product and engineering leaders at B2B SaaS companies. The survey revealed a striking pattern: 83% report high dependency on proprietary foundation model APIs for core product features, yet most assess this dependency as moderate or low strategic risk.

This is textbook dependency blindness—when everyone has high dependency, it feels safe. When current differentiation seems durable, competitive vulnerability feels distant. When the platform provider isn't competing yet, strategic risk feels manageable.

Until they are.

Microsoft didn't compete with Netscape until they bundled Internet Explorer. Google didn't compete with review sites until they launched native local business features. AWS didn't compete with MongoDB until they launched DocumentDB. Meta didn't try to control GIF distribution until they acquired Giphy.

OpenAI doesn't compete with your SaaS product—until they do.

The companies saying "we're not worried" in 2025 sound identical to the companies saying the same thing in 1997, 2007, and 2014. The ones who recognized the pattern early survived. The ones who didn't became acquisition targets or case studies. Meanwhile, nations are spending billions to avoid the dependency these companies dismiss as manageable—France committed €109 billion specifically because they analyzed what happens when critical infrastructure is controlled by external parties.

The dependency blindness extends to model selection itself. Product leaders debate GPT-5 versus Opus-4.5 versus Gemini-3 as if picking the "best" model creates strategic advantage. It doesn't. Model capabilities converge. Pricing commoditizes. The company that spent six months optimizing for one model's specific strengths finds those strengths replicated in competitors' offerings within quarters. The model is commodity infrastructure. Your data, your decision logic, your learning loops—that's what creates durable advantage.

From Application Obsolescence to Category Liquidation

Previous platform cycles destroyed applications and infrastructure. OpenAI is destroying categories.

When AWS launched RDS, they obsoleted your database hosting—but you still owned your database schema, your business logic, your competitive advantage. When Microsoft bundled Office, they obsoleted your productivity tools—but your workflows, your data, your decision-making stayed yours.

OpenAI's pattern is different. They're not just replacing how you deliver software. They're learning the business intelligence that makes your category valuable, then using that intelligence to own the decisions your customers need.

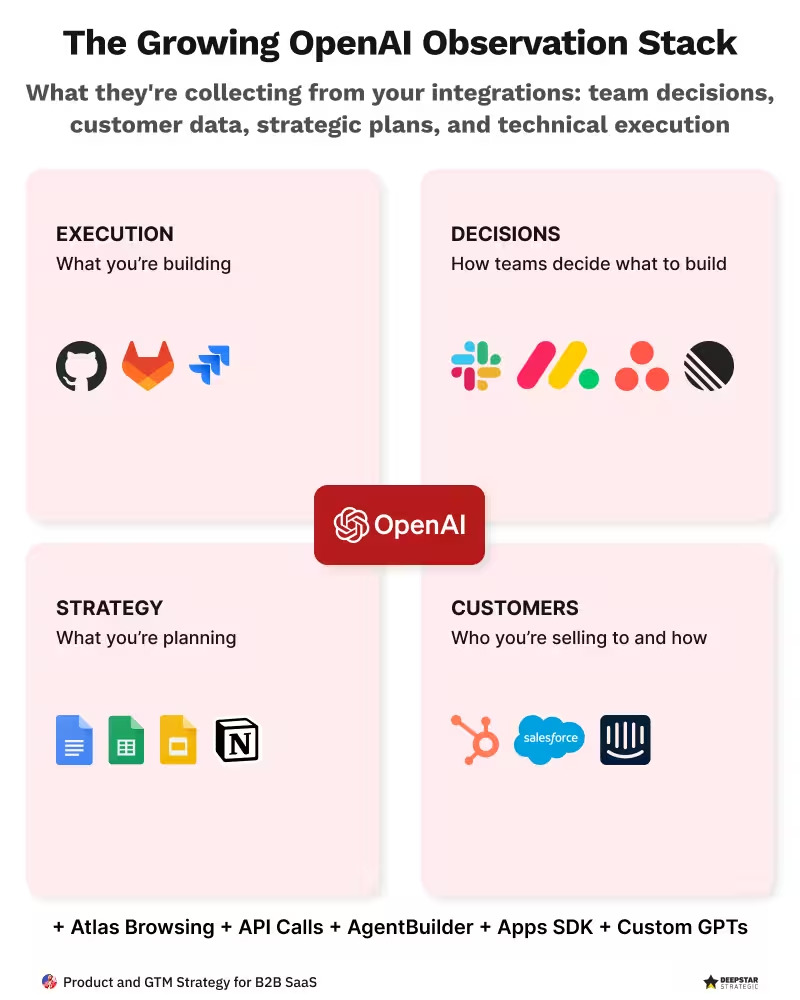

The Growing OpenAI Observation Stack

The observation stack reveals the systematic intelligence gathering:

- Execution layer (GitHub, GitLab, Jira): What you're building

- Decision layer (Slack, Monday, Asana): How teams decide what to build

- Strategy layer (Google Docs, Notion, Slides): What you're planning

- Customer layer (HubSpot, Salesforce, Intercom): Who you're selling to and how

Combined with Atlas browsing, API usage patterns, AgentBuilder workflows, and custom GPT implementations, OpenAI sees your complete business model—then builds the intelligence to make your entire category obsolete.

Controlling Cognition vs Controlling Information

Google controlled information. OpenAI controls cognition.

For 20 years, Google built the most powerful control stack in history—Search saw every query, Chrome saw every click, Analytics saw every visitor, Ads monetized every impression. That was information power.

OpenAI's Atlas doesn't just observe the web—it learns how people think, decide, and act. The shift looks small (just another browser), but it's massive:

| Level | Google | OpenAI Atlas | Outcome |
|---|---|---|---|
| 1. Observation | Crawled the web, captured queries | Observes workflows across every website | Context capture |
| 2. Aggregation | PageRank + ad algorithms | Atlas + memory + model feedback | Cross-context intelligence |
| 3. Intermediation | Search results and ad auctions | Agents acting directly in your browser | Behavior influence |
| 4. Replacement | Killed aggregators via zero-click results | Automates workflows, replaces SaaS functions | Workflow ownership |

Google told you what to click. OpenAI decides what you do next. That's the difference between search engines and decision engines.

The Business Model Transformation

Previous cycles took years and attacked adjacent layers. This cycle is measured in months and attacks your core value proposition. (Brian Balfour's analysis)

Old SaaS model:

- Vendors provide tools

- You own the decision

- You control the outcome

New AI platform model:

- AI provides recommendations

- Platform owns the decision logic

- You rent the outcome

This is the shift from workflow optimization to decision intelligence ownership. And every SaaS company is training their own replacement.

When a customer service SaaS company integrates Company Knowledge and builds agents in AgentBuilder, they're not just adding AI features. They're teaching OpenAI:

- How customer service workflows operate

- Which decision trees create the most value

- What business logic drives retention

- How to automate their entire category

Within 18 months, OpenAI will understand that business better than the company does. Then they'll use that intelligence to replace it at 1/10th the cost.

The Economics That Make Obsolescence Inevitable

Platform providers don't move to Phase 3 (Obsolete) out of malice. They do it because the economics are irresistible.

OpenAI's Current Revenue Model Shift

OpenAI's revenue comes from two sources:

- API usage: A growing but smaller portion of total revenue from developers building on their infrastructure

- Product subscriptions: The majority of revenue from ChatGPT Plus, Team, and Enterprise users—reaching $12-13 billion in annualized revenue by mid-2025, up from $10 billion in June 2025 and $5.5 billion in December 2024

The API business teaches them which workflows create the most value. The product business captures that value directly with better margins, distribution advantages, and zero customer acquisition cost.

The platform provider's calculation:

Your $50,000 monthly API spend teaches them a workflow worth potentially millions annually in subscription revenue if they build it natively. They have pricing power (bundled with ChatGPT), distribution advantages (700+ million weekly active users as of August 2025, CNBC), and structural cost advantages (no revenue share with themselves).

You funded their market research. They capture the value.

This economic dynamic drove every previous platform consolidation:

- Netscape's browser market share → 0% within three years of IE bundling

- Content publishers' Google traffic → down 60%+ after algorithm changes prioritized Google properties

- AWS marketplace vendors → acquired or pivoted after AWS launched competing services with built-in integration advantages

The Inverted Economics of AI Platform Dependency

Previous platform cycles had questionable value exchange. AI cycles have inverted economics entirely.

| Platform Era | You Paid For | They Learned | Economic Exchange |
|---|---|---|---|
| Microsoft (1990s) | Windows licenses | Support calls, MSDN usage, public revenue | You got OS, they got insights |
| Google (2000s) | "Free" (privacy trade) | What content you created | You got traffic, they got data |
| AWS (2010s) | Infrastructure services | What you built on their platform | You got compute, they got usage patterns |
| Strategic Investment | Capital infusion | What products work, market validation | You got funding, they got acquisition option OR competitive intelligence |
| OpenAI (2024-25) | Direct API costs | Your workflows, logic, competitive intelligence | You pay them to learn how to replace you |

The difference is brutal. You're not getting infrastructure while they incidentally learn. You're funding their R&D to build your competitor.

Every dollar you spend on OpenAI APIs:

- Teaches them a workflow pattern worth potentially $100+ in native feature revenue

- Provides market validation for which categories to target

- Funds their compute infrastructure to build competing features

- Creates switching costs that lock you into their ecosystem

This isn't even a new pattern—it's the strategic investment playbook at scale. Large companies routinely invest in startups to watch what succeeds, then decide whether to acquire or copy. The pattern: Corporate VC makes strategic investment, gets board visibility, monitors traction, then acquires if strategic or copies if economical.

What makes AI different: With traditional strategic investments, the startup got capital to grow. With AI APIs, you pay the platform provider while they gather the intelligence to compete with you. The economics are completely inverted.

High Alpha's 2025 SaaS Benchmarks quantifies this platform tax: Companies with AI "core to their product" show 75% gross margins compared to 80% for those with AI as "supporting features." That 5 percentage point compression equals approximately $1.5M annually for a $30M ARR company—money flowing directly to platform providers while they learn how to obsolete you.
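The $1.5M figure follows directly from the two margin levels. The sketch below reproduces the arithmetic. It assumes ARR is a reasonable stand-in for annual revenue, and the function and parameter names are illustrative.

```python
def margin_gap_dollars(annual_revenue: int, supporting_margin_pct: int,
                       core_margin_pct: int) -> float:
    """Annual gross profit given up when margin drops from one level to the other.

    Margins are whole percentages (80 means 80%) so the subtraction stays exact.
    """
    return annual_revenue * (supporting_margin_pct - core_margin_pct) / 100


# $30M ARR company: 80% margin with AI as a supporting feature, 75% with AI at the core.
gap = margin_gap_dollars(30_000_000, 80, 75)
print(f"${gap:,.0f}")  # prints $1,500,000
```

Because the gap scales linearly with revenue, the same five points cost a $100M ARR company about $5M a year.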

The data gets worse: 42% of companies reduced engineering headcount due to AI productivity gains, yet gross margins declined 1% overall. The labor savings moved to platform providers through API costs. You traded controllable costs (salaries) for variable costs that scale with your success (API usage) and fund competitive feature development.

Who Survives Platform Consolidation (The Architectural Moves)

The pattern is predictable. The outcome isn't predetermined. Companies that architect for platform independence survive. Companies that optimize platform integration get obsoleted.

Here are the architectural moves that created independence in previous cycles and work for AI today.

Architectural Move #1: Multi-Tier Model Selection

Companies that survive architect for platform optionality, not optimization. This means strategic choices about which intelligence stays proprietary versus what's delegated to APIs.

The mental model shift matters: treat models as a portfolio you manage, not a partner you depend on. Stop treating your best model as your core bet. Assume you need to swap models quarterly as capabilities shift and pricing changes. The companies building durable advantages aren't optimizing for any single model's capabilities—they're architecting for model fluidity while investing in the data and logic layers that create actual differentiation.

| Use Case | Model Tier | Rationale | Example |
|---|---|---|---|
| Commodity workflows | Open source (Llama 4, Mistral) | Cost efficiency, no competitive intelligence exposure | Document summarization, content generation |
| Proprietary workflows | Self-hosted fine-tuned models | Competitive intelligence protection | Decision logic, proprietary data analysis |
| Strategic capabilities | Selective proprietary API usage | Performance where needed, controlled exposure | Complex reasoning, multimodal analysis |
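One way to make the tiers in this table operational is an explicit routing policy in code, so the choice of tier is a reviewable decision rather than something scattered across call sites. This is a minimal sketch under stated assumptions: the workload labels, the `select_tier` function, and the rule that proprietary data never goes to an external API are all hypothetical choices for illustration.

```python
from enum import Enum


class Tier(Enum):
    OPEN_SOURCE = "open_source"
    SELF_HOSTED_FINE_TUNED = "self_hosted_fine_tuned"
    PROPRIETARY_API = "proprietary_api"


# Hypothetical workload labels mapped to the three tiers in the table.
ROUTING_POLICY = {
    "document_summarization": Tier.OPEN_SOURCE,
    "content_generation": Tier.OPEN_SOURCE,
    "decision_logic": Tier.SELF_HOSTED_FINE_TUNED,
    "proprietary_data_analysis": Tier.SELF_HOSTED_FINE_TUNED,
    "complex_reasoning": Tier.PROPRIETARY_API,
    "multimodal_analysis": Tier.PROPRIETARY_API,
}


def select_tier(workload: str, touches_proprietary_data: bool = False) -> Tier:
    """Pick a model tier for a workload.

    Unknown workloads default to the self-hosted tier (an assumed safe default).
    """
    tier = ROUTING_POLICY.get(workload, Tier.SELF_HOSTED_FINE_TUNED)
    # Assumed rule: proprietary data is never routed to an external API.
    if touches_proprietary_data and tier is Tier.PROPRIETARY_API:
        return Tier.SELF_HOSTED_FINE_TUNED
    return tier


print(select_tier("document_summarization").value)
print(select_tier("complex_reasoning", touches_proprietary_data=True).value)
```

Keeping the policy in one dictionary means a quarterly model swap changes a mapping, not the application code that calls it.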

Who did this successfully: Snowflake launched on AWS but built a cloud-agnostic architecture and now runs on AWS, GCP, and Azure. MongoDB offered a managed service but maintained its open-source core and cross-platform capability. Both survived by avoiding single-platform optimization.

Who didn't: Pure AWS marketplace vendors that built exclusively on AWS-specific features got obsoleted when AWS launched competing native services. The ones without platform portability had no escape path.

Beyond general-purpose models, domain-specific small language models (SLMs) offer additional options for specialized workflows—medical, legal, financial, and industry-specific applications where proprietary fine-tuning on domain data creates competitive advantages platform providers can't easily replicate.

Open-source models like Llama 4 and Mistral provide alternatives to proprietary APIs, available either through self-hosting (which requires infrastructure and operations investment) or through hosted providers like Meta's Llama API, Groq, and Together.ai. The choice isn't purely economic—it's strategic. Proprietary APIs optimize for velocity and convenience. Self-hosted or open-source-based architectures optimize for platform independence and competitive intelligence protection.
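Platform independence at the code level usually comes down to a thin interface between the application and whichever model serves the request. The sketch below shows one possible shape for it. `ModelBackend`, `EchoBackend`, and `complete_with_fallback` are hypothetical names, and the stand-in backend only echoes its input instead of calling a real model.

```python
from typing import Protocol


class ModelBackend(Protocol):
    """Anything that can turn a prompt into a completion."""
    name: str

    def complete(self, prompt: str) -> str: ...


class EchoBackend:
    """Stand-in backend for illustration; a real one would call a model."""

    def __init__(self, name: str, fail: bool = False):
        self.name = name
        self.fail = fail

    def complete(self, prompt: str) -> str:
        if self.fail:
            raise RuntimeError(f"{self.name} unavailable")
        return f"[{self.name}] {prompt}"


def complete_with_fallback(backends: list[ModelBackend], prompt: str) -> str:
    """Try each backend in order and return the first successful completion."""
    errors = []
    for backend in backends:
        try:
            return backend.complete(prompt)
        except Exception as exc:
            errors.append(f"{backend.name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))


backends = [EchoBackend("proprietary-api", fail=True), EchoBackend("self-hosted")]
print(complete_with_fallback(backends, "Summarize this contract."))
```

Application code depends only on `complete`, so moving a workload between a proprietary API, a hosted open-source provider, and a self-hosted model becomes a configuration change.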

The current economics make this even more critical.

Architectural Move #2: Proprietary Data Moats

Intelligence that compounds with usage creates defensibility APIs can't replicate.

Platform providers have foundation models. What they don't have is your proprietary data:

Zero-party data: Customer feedback, explicit preferences, stated goals, documented decisions

First-party data: Product usage patterns, feature adoption, workflow completion rates, outcome tracking

Second-party data: Sales intelligence, customer success insights, account expansion signals

Third-party data: Market intelligence, competitive benchmarks, industry-specific context

Connected in unified intelligence loops, these data sources create decision-making systems that improve continuously through customer usage. The switching cost isn't workflow familiarity—it's intelligence quality degradation.
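A first step toward connecting these sources is giving every signal a common shape keyed by account, so the four data types land in one record. This is a rough sketch only. The `Signal` type, the source labels, and the example metric names are assumptions made for illustration, not a prescribed schema.

```python
from collections import defaultdict
from dataclasses import dataclass

# The four data types described above.
SOURCES = {"zero_party", "first_party", "second_party", "third_party"}


@dataclass(frozen=True)
class Signal:
    account_id: str
    source: str   # one of SOURCES
    name: str     # hypothetical metric name
    value: float


def unify(signals):
    """Group signals by account so all four data sources feed one profile."""
    profiles = defaultdict(dict)
    for signal in signals:
        if signal.source not in SOURCES:
            raise ValueError(f"unknown source: {signal.source}")
        profiles[signal.account_id][f"{signal.source}.{signal.name}"] = signal.value
    return dict(profiles)


signals = [
    Signal("acme", "zero_party", "stated_goal_priority", 0.9),
    Signal("acme", "first_party", "feature_adoption_rate", 0.6),
    Signal("acme", "second_party", "expansion_signal", 0.4),
]
profiles = unify(signals)
print(sorted(profiles["acme"]))
```

The unified profile is what a proprietary model would train on. A platform provider sees only the prompts sent to it, not this combined record.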

One CPO at a supply chain SaaS company explained their competitive moat:

"Customers occasionally explore building their own forecasting capabilities, but conversations end quickly once they realize they'd need years of proprietary supply chain data and domain-specific model training to match current decision accuracy."

That's decision intelligence protection. The complexity isn't accidental—it's the moat.

Architectural Move #3: Decision Ownership Over Workflow Optimization

The value proposition shift matters more than most product leaders realize.

| Dimension | Workflow Optimization | Decision Intelligence |
|---|---|---|
| Primary Value | Helps users work faster | Makes decisions users trust |
| Intelligence Source | Shared foundation models | Proprietary data + models |
| Replication Barrier | Workflow understanding | Data access + model training |
| Platform Dependency | High (built on APIs) | Strategic only (selective usage) |
| Customer DIY Risk | High (logic is visible) | Low (intelligence is hidden) |
| Switching Cost | Change management | Intelligence quality gap |

Copilot features help users complete workflows faster. They're valuable, but they're vulnerable because they optimize shared infrastructure anyone can access.

Captain systems own decisions and deliver outcomes. They analyze context only you have, apply intelligence only you've built, make decisions users trust without verification, and improve continuously through proprietary learning loops.

The architectural difference determines whether platform consolidation makes you more vulnerable or more defensible.

The Transformation Window (12-18 Months)

Timeline compression isn't theoretical. It's mathematical.

Why 12-18 months specifically?

Model improvement velocity. GPT-3 launched June 2020. GPT-4 launched March 2023. The o1 reasoning models launched September 2024. GPT-5 launched August 2025. Major capability improvements now arrive every 6-12 months, and the pace is accelerating.

Product shipping speed. ChatGPT Plugins launched March 2023. GPTs launched November 2023. The GPT Store launched January 2024. Operator launched January 2025. Each iteration replaces API use cases with native features in roughly 6-month cycles.

Enterprise adoption acceleration. Cloud infrastructure took 5+ years for mainstream enterprise adoption (2010-2015). AI capabilities reached similar penetration in 18 months (2023-2024). Faster adoption means faster platform learning and faster competitive feature development.

The architectural decision calcification point

After 18-24 months of building on proprietary APIs, dependencies become too expensive to unwind. Your codebase, product architecture, customer expectations, and team skillsets all optimize around the platform. Migration becomes economically irrational.

The pattern from previous cycles proves this out:

Companies that transformed early—Snowflake architecting for multi-cloud before AWS lock-in became expensive, MongoDB maintaining open-source core while offering managed services—became category leaders worth billions.

Companies that waited until the threat was obvious—pure AWS marketplace vendors, Google-dependent content publishers, Microsoft-only productivity tools—got acquired at fractions of their potential value or shut down entirely.

The difference between those outcomes wasn't capability or resources. It was recognizing the pattern before everyone else and transforming while it was still economically feasible.
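The calcification described above tends to happen when vendor-specific calls spread through product code. One common way to keep the unwinding cost bounded is to route every model call through a thin, provider-agnostic interface. The following is a minimal sketch under stated assumptions: `CompletionProvider`, `EchoProvider`, and `summarize_ticket` are hypothetical names, and no real vendor SDK is called.

```python
from typing import Protocol


class CompletionProvider(Protocol):
    """Hypothetical interface: the only model surface product code depends on."""

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Stand-in provider for illustration; a real adapter would wrap a vendor SDK."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        # Echo the prompt back, tagged with the provider name.
        return f"[{self.name}] {prompt}"


def summarize_ticket(provider: CompletionProvider, ticket_text: str) -> str:
    # Product logic builds the prompt; vendor-specific details stay in the adapter.
    prompt = f"Summarize this support ticket: {ticket_text}"
    return provider.complete(prompt)


if __name__ == "__main__":
    # Swapping providers changes one constructor call, not the product logic.
    for provider in (EchoProvider("vendor-a"), EchoProvider("vendor-b")):
        print(summarize_ticket(provider, "Login fails after password reset"))
```

An interface like this does not remove the dependency, but it confines it to adapters, so a migration touches a handful of classes rather than the whole codebase.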

How Platform Dependency Compounds With Other Threats

Platform dependency doesn't exist in isolation. It's one of three compounding forces that accelerate together.

The Triple Squeeze shows how platform dependency creates feedback loops with AI-native competitive pressure and customer DIY capabilities:

AI-native startups force you to ship features faster to maintain competitive parity. Faster feature shipping increases your foundation model API dependencies because building on proprietary APIs is faster than architecting for platform independence. Those dependencies teach platform providers your workflows while giving customers confidence that the intelligence is commodity infrastructure they can access directly.

Customer DIY validates that workflows are simple enough to rebuild with the same AI tools you're using. Platform dependency ensures everyone—including your competitors and customers—has access to the same intelligence foundation.

The cycle compounds. Each squeeze accelerates the others.

Understanding the platform consolidation pattern is critical, but only if you also see how it interacts with the other two threats to create an inescapable dynamic.

The Copilot vs. Captain Question

The most effective way to evaluate your platform dependency risk is to analyze whether your AI roadmap builds toward decision intelligence or optimizes workflows on shared infrastructure.

Most product teams haven't done this analysis. They're shipping based on customer requests, competitive parity pressure, and roadmap velocity without examining the architectural implications. The result is copilot feature after copilot feature, each one improving workflows while showing both platform providers and customers how replicable the work is.

The strategic question isn't "are we shipping AI features fast enough?"

It's "are we building systems that create compounding advantages or compounding vulnerabilities?"

The architecture that creates compounding advantages requires three layers most teams skip: unified intelligence, decision engines with learning loops, and trust architecture that enables adoption.

We built an assessment that categorizes up to 20 AI features as either copilots (workflow optimization vulnerable to platform commoditization) or captains (decision systems requiring proprietary intelligence).

The ratio reveals your architectural trajectory. If your roadmap is 90% copilots, you're optimizing within the squeeze. If it's shifting toward captains built on proprietary data, you're architecting for independence.

Five minutes for strategic clarity. No email required.

Are You Building Copilots or Captains?

Assess whether your AI features optimize workflows anyone can copy or own decisions only you can make.
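The copilot-to-captain ratio described above is simple arithmetic once each feature has a label. The sketch below is not the assessment itself; the feature names, the labels in `ROADMAP`, and the function `copilot_ratio` are hypothetical and chosen only to illustrate the calculation.

```python
from collections import Counter


def copilot_ratio(features):
    """Return the share of labeled features that are copilots (0.0 to 1.0)."""
    counts = Counter(label for _, label in features)
    total = counts["copilot"] + counts["captain"]
    if total == 0:
        raise ValueError("no features labeled 'copilot' or 'captain'")
    return counts["copilot"] / total


# Hypothetical roadmap: nine workflow helpers and one decision system.
ROADMAP = [
    ("Draft reply suggestions", "copilot"),
    ("Meeting summary", "copilot"),
    ("Ticket auto-tagging", "copilot"),
    ("Search autocomplete", "copilot"),
    ("Report text generation", "copilot"),
    ("Email tone rewrite", "copilot"),
    ("Form auto-fill", "copilot"),
    ("Release note drafting", "copilot"),
    ("Knowledge base Q&A", "copilot"),
    ("Churn intervention decisions", "captain"),
]

if __name__ == "__main__":
    ratio = copilot_ratio(ROADMAP)
    print(f"Copilot share of roadmap: {ratio:.0%}")
```

A roadmap like this one sits at the 90% copilot mark that the text describes as optimizing within the squeeze.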

Pattern Recognition Matters More Than Current Performance

Platform providers have run this playbook four times. The companies that survived recognized the pattern early and transformed before the squeeze became inescapable. The companies that optimized faster within the doomed paradigm became case studies in what not to do.

Microsoft took 5-7 years to consolidate its ecosystem. Google took 4-6 years. AWS took 3-5 years. Meta compressed it to 18-24 months. OpenAI is running the same pattern in 12-18 months, and it is accelerating.

The difference between surviving and getting obsoleted isn't capability or resources. It's pattern recognition and architectural intent.

Your team can build AI features. The question is whether you're architecting those features to create independence or training your replacement through API dependencies that teach platform providers exactly how to compete with you.

Every previous platform consolidation cycle gave companies a window to recognize the pattern and transform. Most didn't see it until too late—until the platform provider was already shipping competing features, until migration costs were prohibitive, until the only options were acquisition or shutdown.

The transformation window for AI is 12-18 months. After that, architectural decisions calcify and unwinding platform dependencies becomes economically irrational.

Which side of the pattern will you be on?

Let's Discuss Your AI Strategy

30-minute discovery call to explore your AI transformation challenges, competitive positioning, and strategic priorities. No pressure—just a conversation about whether platform independence makes sense for your business.

Dive deeper into the state of SaaS in the age of AI

Understand the market forces reshaping SaaS—from platform consolidation to AI-native competition

The State of SaaS in the Age of AIThe Constraint Nobody's Modeling

The State of SaaS in the Age of AIThe Constraint Nobody's ModelingEvery AI sovereignty strategy makes the same assumption: when you're ready to build independence, the resources will be available. Analyze the economics and you'll find that assumption is wrong. Compute is concentrating. Talent is concentrating. Policy is unstable. The window is closing.

Andrew Hatfield

Andrew HatfieldDecember 9, 2025

The State of SaaS in the Age of AIThe Dependency Architecture Being Built for Allies

The State of SaaS in the Age of AIThe Dependency Architecture Being Built for AlliesOpenAI's 'sovereign deployment options' sound like independence. The US government's AI Action Plan describes these as partnerships. Read the actual documents and you'll find explicit language about 'exporting American AI to allies.' That's not a sovereignty framework—it's a dependency architecture with better PR.

Andrew Hatfield

Andrew HatfieldDecember 9, 2025

The State of SaaS in the Age of AIWhat National AI Strategy Teaches SaaS Companies

The State of SaaS in the Age of AIWhat National AI Strategy Teaches SaaS CompaniesFrance, Singapore, India, and the UK collectively spent tens of millions developing AI sovereignty strategies. The conclusions are public. They translate directly to your product architecture decisions. Most SaaS product teams haven't read a single one.

Andrew Hatfield

Andrew HatfieldDecember 9, 2025

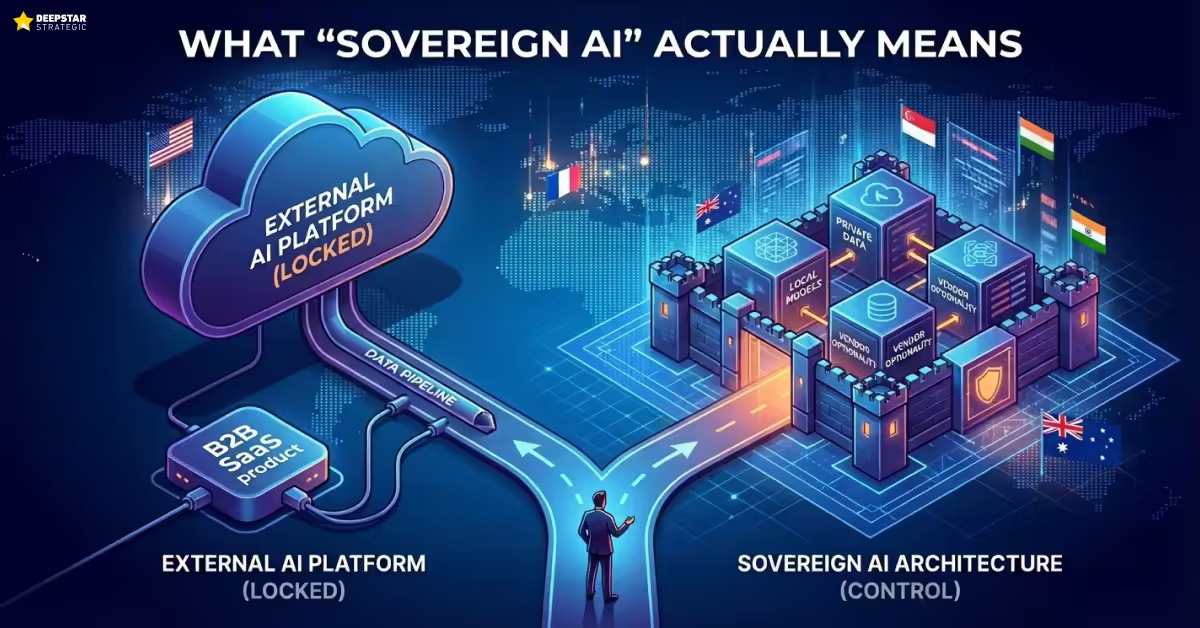

The State of SaaS in the Age of AIWhat 'Sovereign AI' Actually Means for Your Product Strategy

The State of SaaS in the Age of AIWhat 'Sovereign AI' Actually Means for Your Product StrategyFrance is spending €109 billion on AI infrastructure. Singapore admits GPUs are in short supply and they face 'intense global competition' to access them. Your product team is debating whether to increase OpenAI API spend by 20%. These aren't different conversations—they're the same conversation at different scales.

Andrew Hatfield

Andrew HatfieldDecember 9, 2025