Building Decision Intelligence: The Architecture That Separates Winners From Case Studies

Most AI implementations fail at the architecture layer, not the model layer. Here's the three-layer framework that separates decision systems that compound from features that plateau.

Andrew Hatfield

December 4, 2025

The Architecture Gap Nobody Talks About

You've recognized the Triple Squeeze. You understand that transformation beats feature addition. You've even started planning your shift from workflow optimization to decision ownership.

Now comes the hard part: actually building it.

Most companies that attempt decision intelligence fail. Not because they lack understanding—they've read the frameworks, attended the conferences, hired the consultants. They fail because they build systems that can't learn, can't be trusted, and can't scale.

The MIT NANDA research found 95% of AI implementations deliver zero measurable ROI. That statistic deserves unpacking, because it's not about technology failure.

Emergence Capital's 2025 analysis makes the pattern concrete: 59% of companies have implemented GenAI, but satisfaction averages just 59 out of 100—essentially a failing grade two years after ChatGPT's launch. The 24-point gap between top-quartile performers (74) and bottom-quartile (50) reveals something important: implementation quality, not tool selection, determines success. Companies using identical models achieve dramatically different outcomes based on how they architect around those models.

Emergence Capital's root cause analysis is blunt: organizations are bolting AI onto existing processes rather than redesigning workflows around AI capabilities. They're treating AI as a feature layer instead of an architectural foundation.

The financial data confirms this pattern. ICONIQ's State of Software 2025 shows 80% of companies actively experimenting with AI tools—near-universal adoption. Yet ARR per employee improvements are coming from organizational restructuring and workforce changes, not AI-driven productivity gains. The promised 10x productivity multiplier isn't materializing because companies are optimizing the wrong layer.

Vendr's 2025 SaaS Trends data adds another dimension: despite widespread AI feature adoption, ACVs remained flat year-over-year. Buyers see AI capabilities as table stakes, not premium differentiation. The market has already adjusted its expectations—AI features don't command pricing power because they don't deliver differentiated value.

The pattern across all this data points to the same conclusion: companies select capable models, integrate them competently, ship features that technically work—and still fail to create measurable business value. The technology works. The architecture doesn't.

Here's the counterintuitive insight most teams miss: a weaker model with better access to your proprietary data outperforms a frontier model with generic access. Companies obsessing over which foundation model to use are optimizing the wrong variable. The model is commodity infrastructure. Your business logic, your customer data, your decision history—that's what creates compounding advantage.

The gap isn't capability. It's architecture.

I've watched 47 B2B SaaS companies attempt decision intelligence over the past 18 months. The pattern is consistent. Companies that treat decision intelligence as a feature set build systems that plateau within months. Companies that treat it as an architectural approach build advantages that compound for years.

The difference comes down to three layers most teams either skip or under-engineer. Get these wrong, and your decision system becomes another AI feature that impresses in demos but disappoints in production. Get them right, and you build the kind of moat that AI-native competitors already understand.

What Decision Intelligence Actually Means

Decision intelligence isn't better analytics. It isn't smarter recommendations. It isn't another dashboard with AI-generated insights.

Decision intelligence means decision ownership. Your product makes the decision, takes accountability for the outcome, and improves with every interaction.

The distinction matters more than most teams realize.

Copilots assist. They help users complete tasks faster. They surface relevant information, suggest next steps, automate repetitive work. Users still own every decision. The product optimizes the workflow; humans determine the outcome.

Captains own. They make decisions users trust without verification. They take action, deliver outcomes, and accept accountability for results. The product determines the outcome; humans validate exceptions.

| Dimension | Copilot | Captain |
|---|---|---|
| Core Function | Assists users with tasks | Makes decisions autonomously |
| Decision Ownership | User decides, AI suggests | AI decides, user validates exceptions |
| Value Proposition | "Do your job better" | "We do it for you" |
| Trust Requirement | Low: users verify everything | High: users act without checking |
| Architecture Needs | Good UX, reliable APIs | Proprietary data, learning loops, accountability |
| Improvement Pattern | Manual updates | Compounds with usage |

The architectural requirements are completely different. Copilots need good UX and reliable API integrations. Captains need proprietary data, learning loops, and accountability architecture that most teams have never built.

Recent failures at major consultancies illustrate what happens when organizations skip the trust and validation layer. Deloitte used AI to generate a $440,000 report for the Australian government that was riddled with hallucinations—fabricated citations, invented statistics, confident assertions about things that never happened. A partner exited; Deloitte refunded $290,000. Meanwhile, KPMG auditors were caught using AI to cheat on professional certification tests—a governance failure that undermined the firm's credibility on AI expertise entirely.

These aren't technology failures. The models worked exactly as designed. These are architecture failures—organizations deploying AI without the validation layer that catches hallucinations before they reach clients, without the governance frameworks that prevent misuse. When you skip the trust architecture, you don't build decision intelligence. You build liability.

The companies achieving 2-3x valuation advantages aren't building better recommendation engines. They're building systems that own decisions—with accuracy high enough that users act without second-guessing, and accountability architecture that makes AI decisions auditable when they matter.

That requires a specific architecture most teams skip.

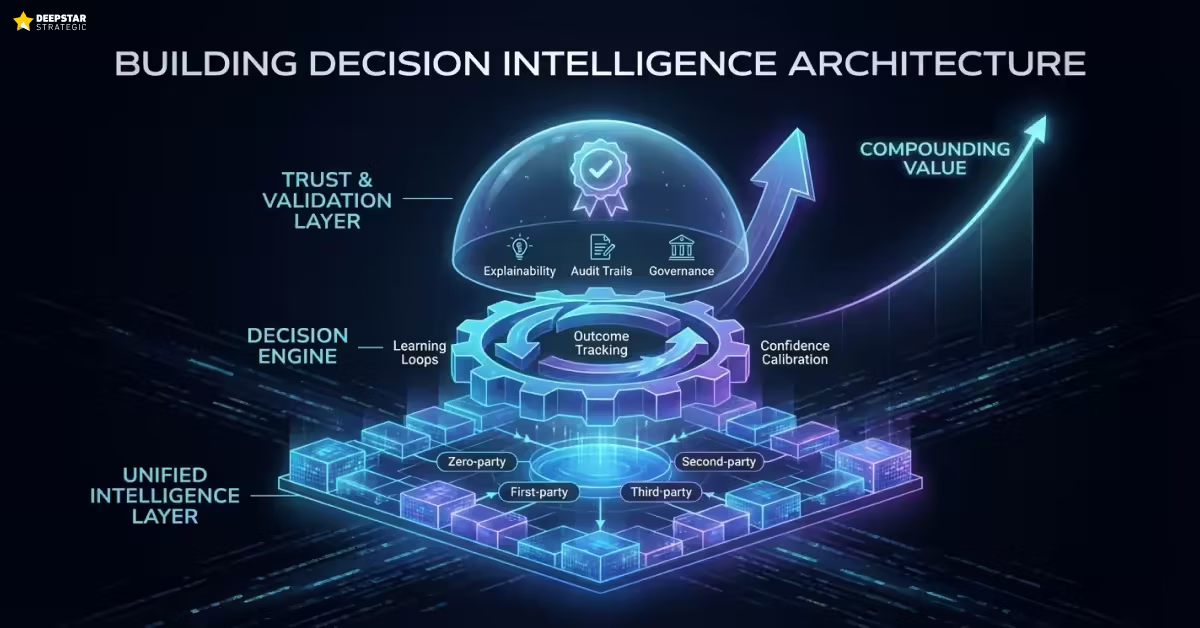

The Three-Layer Architecture

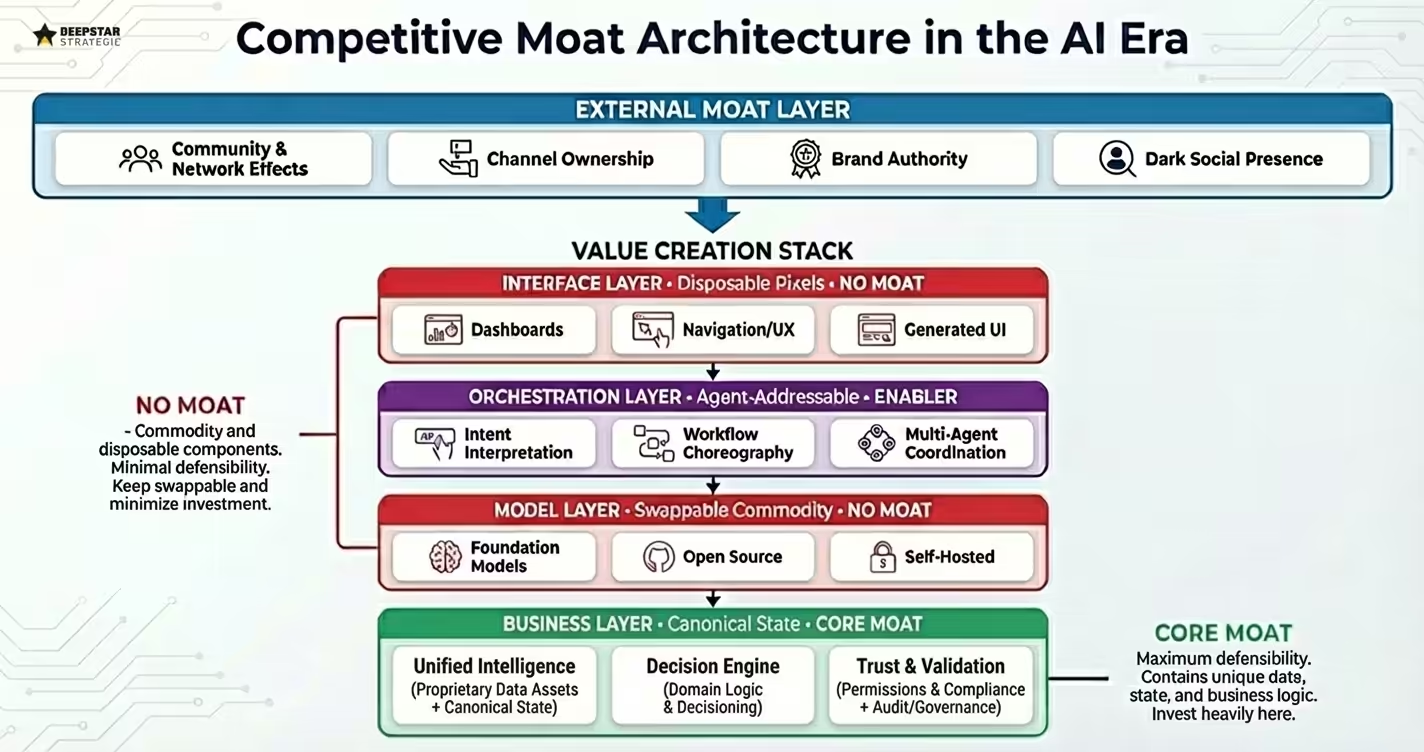

Before diving into the details, it helps to see where decision intelligence fits in the full competitive moat stack.

The graphic reveals a crucial insight: most of what SaaS companies invest in has no moat. Your interface layer—dashboards, navigation, generated UI—is disposable. Beautiful pixels don't defend you when agents can route around your screens entirely or when generative UI can recreate your layouts on demand. Your model layer is commodity infrastructure; foundation models are swappable, and treating "we use GPT-4" as differentiation is a category error.

The orchestration layer—intent interpretation, workflow choreography, multi-agent coordination—is an enabler, not a moat. It's necessary but not sufficient. Competitors can build equivalent orchestration.

Your moat lives in the business layer: canonical state, domain logic, proprietary data assets, and permissions. The three-layer architecture below is how you build a defensible business layer. It breaks down into unified intelligence (your proprietary data), decision engines (your domain logic), and trust architecture (your permissions and compliance). Everything else in the stack is either table stakes or actively replaceable.

Every decision system that actually works shares three architectural layers. Skip any one, and your system hits a ceiling. Underinvest in any one, and you build a feature instead of a moat.

Layer 1: Unified Intelligence Layer

This is where proprietary data becomes a strategic asset.

Most SaaS companies have valuable data scattered across systems that never connect. Product usage telemetry lives in one warehouse. Customer feedback sits in support tickets and NPS surveys. Sales intelligence exists in CRM notes and call recordings. Market signals come from third-party providers nobody integrates.

The Unified Intelligence Layer connects four data sources into a single intelligence architecture:

| Data Type | Source | What It Captures | Why It Matters |
|---|---|---|---|
| Zero-party | Direct customer input | Preferences, goals, feedback from surveys and onboarding | Highest-signal data most companies underutilize |
| First-party | Product behavior | Usage patterns, feature adoption, workflow paths, abandonment points | Data platform providers learn from your API calls |
| Second-party | Relationship signals | Sales insights, CS health scores, support patterns, renewal indicators | Connects product usage to business outcomes |
| Third-party | Market context | Industry benchmarks, competitive moves, economic indicators | Makes the other three data types actionable |

Most companies treat these as separate data sources feeding separate systems. Marketing uses third-party data for targeting. Product uses first-party data for roadmap decisions. Sales uses second-party data for deal management. Nobody connects them.

The Unified Intelligence Layer creates a single source of truth that feeds both product decisions and go-to-market intelligence. When a customer's usage patterns shift, sales knows before the renewal conversation. When market conditions change, product roadmaps adapt before competitors react. When support tickets spike around a specific workflow, the decision engine learns without manual intervention.

This layer is your moat—but only if you architect it correctly. Competitors can copy your features. They can license the same foundation models. They can't replicate years of unified intelligence accumulation. This is your business layer: the canonical state, the decision history, the proprietary logic that makes your product irreplaceable. Nations are investing billions to build exactly this kind of proprietary infrastructure—France's €109 billion commitment isn't about having AI, it's about owning the intelligence layer that feeds it.

There's a second requirement most teams miss: this layer must be accessible to both humans and agents. As AI agents increasingly orchestrate workflows across multiple systems, the question shifts from "does this have the best dashboard?" to "is this the system easiest for agents to choreograph?" Companies building beautiful interfaces on top of inaccessible data will watch agents route around them entirely. Your business layer needs clean APIs, well-defined schemas, and state that external systems can query and update.
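To make the idea concrete, here is a minimal Python sketch of a unified record, assuming each siloed system can export rows keyed by a shared `customer_id`. The schema, the `unify` helper, and the `to_agent_payload` function are hypothetical names chosen for illustration, not a prescribed design.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any

# The four data types the Unified Intelligence Layer connects.
DATA_TYPES = ("zero_party", "first_party", "second_party", "third_party")


@dataclass
class CustomerIntelligence:
    """One unified record per customer (hypothetical schema)."""
    customer_id: str
    zero_party: dict[str, Any] = field(default_factory=dict)    # stated goals, survey answers
    first_party: dict[str, Any] = field(default_factory=dict)   # product usage telemetry
    second_party: dict[str, Any] = field(default_factory=dict)  # sales, CS, and support signals
    third_party: dict[str, Any] = field(default_factory=dict)   # market context


def unify(customer_id: str, sources: dict[str, list[dict[str, Any]]]) -> CustomerIntelligence:
    """Fold rows exported from separate systems into a single record.

    `sources` maps a data type name to rows from one silo. Later rows
    overwrite earlier ones when they share a key.
    """
    record = CustomerIntelligence(customer_id=customer_id)
    for data_type, rows in sources.items():
        if data_type not in DATA_TYPES:
            raise ValueError(f"unknown data type: {data_type}")
        bucket = getattr(record, data_type)
        for row in rows:
            if row.get("customer_id") == customer_id:
                bucket.update({k: v for k, v in row.items() if k != "customer_id"})
    return record


def to_agent_payload(record: CustomerIntelligence) -> str:
    """Serialize the record as JSON so an agent can read it without the UI."""
    return json.dumps(asdict(record), sort_keys=True)
```

A real layer would sit on a warehouse or event stream rather than in-memory dictionaries. The point of the sketch is the shape: one record, four clearly separated data types, and a machine-readable export.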

Layer 2: Decision Engine

This is where intelligence becomes action.

Most AI implementations use static rules. If usage drops below threshold X, trigger intervention Y. If customer matches profile A, recommend action B. Rules work until they don't—and they stop working faster than most teams expect.

Decision engines use learning loops instead of static rules. Every decision generates outcome data. Every outcome refines the next decision. The system gets smarter with every interaction, not just with every model update.

The architecture requires four components:

| Component | Function | Key Questions |
|---|---|---|
| Decision Logic | Reasoning framework that converts intelligence into action | What signals matter most? How are conflicts resolved? What confidence triggers autonomy vs. review? |
| Outcome Tracking | Feedback mechanism that captures decision results | Did the action achieve the outcome? How long did it take? What side effects emerged? |
| Confidence Calibration | Self-awareness that determines when to act vs. escalate | High-confidence → autonomous. Low-confidence → human judgment. Threshold shifts based on history. |
| Learning Loops | Improvement mechanism that compounds over time | Every decision-outcome pair trains the next decision. Pattern recognition improves. Accuracy compounds. |

Static rule systems plateau. You ship them, they work at a certain accuracy level, and improvement requires manual rule refinement. Decision engines compound. The gap between rule-based and learning-based systems widens every month.
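The difference between a static rule and a learning loop can be sketched in a few lines of Python. This is a toy, not a production design: the `DecisionEngine` class, its starting threshold, and the step sizes are all assumptions, and a simple threshold adjustment stands in for whatever model retraining a real system would use.

```python
from dataclasses import dataclass, field


@dataclass
class DecisionEngine:
    """Toy engine: acts above a confidence threshold, otherwise escalates."""
    threshold: float = 0.80  # starting autonomy threshold (assumed value)
    step: float = 0.01       # how far one outcome moves the threshold (assumed value)
    floor: float = 0.60      # never become more permissive than this
    ceiling: float = 0.95    # never become stricter than this
    history: list[tuple[float, bool]] = field(default_factory=list)

    def decide(self, confidence: float) -> str:
        """Confidence calibration: autonomous action vs. human review."""
        return "act" if confidence >= self.threshold else "escalate"

    def record_outcome(self, confidence: float, succeeded: bool) -> None:
        """Outcome tracking plus a minimal learning loop.

        A success lowers the threshold slightly (more autonomy). A failure
        raises it three times as fast, so trust is lost quicker than earned.
        """
        self.history.append((confidence, succeeded))
        delta = -self.step if succeeded else 3 * self.step
        self.threshold = min(self.ceiling, max(self.floor, self.threshold + delta))
```

A static rule would be `decide` with a hard-coded threshold and no `record_outcome`. The learning version differs only in that every outcome feeds back into the next decision, which is the property that lets accuracy compound.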

This is why AI-native companies maintain structural advantages. They built learning loops from day one. Traditional SaaS companies retrofitting AI features onto workflow products don't have the feedback architecture to improve.

Layer 3: Trust & Validation Layer

This is where architecture meets adoption.

The most sophisticated decision engine fails if users don't trust it. Trust isn't a feeling—it's an architecture requirement.

| Component | Purpose | Impact on Adoption |
|---|---|---|
| Explainability | Users understand why the system made a specific decision | "Recommended based on similar customers who achieved X outcome" builds trust. Black boxes erode it. |
| Audit Trails | Traceable path from input data to output action | When decisions matter (contracts, pricing), stakeholders can verify reasoning. |
| Exception Handling | System recognizes its own limitations | Graceful degradation to human judgment preserves trust. Silent failures destroy it. |
| Governance Frameworks | Rules for overrides, approvals, escalations | Enables autonomous decision-making at scale without organizational chaos. |

Companies that skip the trust layer build impressive demos that fail in production. Users learn the system can't be trusted and route around it. Adoption stalls. The decision engine becomes another feature nobody uses.

Companies that invest in trust architecture build systems that get adopted, generate outcome data, and improve over time. The trust layer isn't optional—it's what separates production systems from proof-of-concepts.
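As a rough illustration of how explainability, audit trails, and exception handling fit together, the Python sketch below wraps any decision function in an audit record. The `AuditRecord` fields, the `decide_with_audit` wrapper, and the 0.8 default threshold are hypothetical. The only assumption about the model is that it returns an action, a confidence, and a plain-language reason.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Any, Callable


@dataclass(frozen=True)
class AuditRecord:
    """Traceable path from input data to output action (hypothetical schema)."""
    decision_id: str
    inputs: dict[str, Any]
    action: str
    confidence: float
    explanation: str
    decided_at: str


def decide_with_audit(
    decision_id: str,
    inputs: dict[str, Any],
    model: Callable[[dict[str, Any]], tuple[str, float, str]],
    min_confidence: float = 0.8,  # assumed governance threshold
) -> AuditRecord:
    """Run `model`; hand off to a human on errors or low confidence."""
    try:
        action, confidence, why = model(inputs)
    except Exception as exc:  # exception handling: never fail silently
        action, confidence, why = "escalate_to_human", 0.0, f"model error: {exc}"
    else:
        if confidence < min_confidence:
            why = (
                f"confidence {confidence:.2f} below {min_confidence:.2f}; "
                f"original suggestion: {action}"
            )
            action = "escalate_to_human"
    decided_at = datetime.now(timezone.utc).isoformat()
    return AuditRecord(decision_id, inputs, action, confidence, why, decided_at)


def to_log_line(record: AuditRecord) -> str:
    """One JSON line per decision, suitable for an append-only audit log."""
    return json.dumps(asdict(record), sort_keys=True)
```

Every path through the wrapper produces a record with an explanation, including the failure paths. That is the design choice worth copying: escalation is a logged decision, not an absence of one.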

| Layer | Function | Key Components | Common Failure Mode |
|---|---|---|---|
| Unified Intelligence | Data → Signal | Zero/first/second/third-party integration, single source of truth | Siloed data, no cross-functional intelligence |
| Decision Engine | Signal → Action | Learning loops, outcome tracking, confidence calibration | Static rules that can't improve |
| Trust & Validation | Action → Adoption | Explainability, audit trails, governance | Black-box decisions users ignore |

Implementation Patterns That Work

Architecture without execution is theory. Here's how the three layers translate to implementation decisions.

Model Selection for Decision Systems

Not every decision requires the same model architecture. The three-tier model selection strategy from the transformation framework applies directly:

| Tier | Strategy | When to Use | Platform Exposure |
|---|---|---|---|
| 1. Proprietary | Fine-tune models on your unified intelligence layer | Decisions that create competitive differentiation | None: this is your moat |
| 2. Open Source | Deploy Llama, Mistral, etc. on your infrastructure | Commodity capability where cost matters | None: you control everything |
| 3. Strategic API | OpenAI, Anthropic APIs for cutting-edge capability | Early experimentation, capabilities you can't build yet | High: trading intelligence for velocity |

Tier 1 examples: Supplier recommendations with 94% acceptance rates. Pricing optimization based on customer-specific patterns. Resource allocation that improves with every cycle.

Tier 2 examples: Classification tasks. Summarization. Routine analysis. You control the data, outputs, and learning.

Tier 3 examples: Early-stage experimentation. Specific use cases where value exceeds exposure risk.

The mistake most companies make is using Tier 3 for everything. It's fastest for initial implementation. It's most expensive strategically.
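One way to keep Tier 3 from becoming the default is to make tier selection an explicit, reviewable function. The sketch below is a deliberate simplification in Python: the two boolean inputs and the `select_tier` name are assumptions, and a real policy would weigh cost, latency, and data sensitivity as well.

```python
from enum import Enum


class Tier(Enum):
    PROPRIETARY = 1    # fine-tuned on your unified intelligence layer
    OPEN_SOURCE = 2    # open model deployed on your own infrastructure
    STRATEGIC_API = 3  # third-party API for capability you can't build yet


def select_tier(differentiating: bool, can_build_in_house: bool) -> Tier:
    """Pick a model tier for one decision type.

    differentiating: does this decision create competitive differentiation?
    can_build_in_house: can your team deliver the capability today?
    """
    if not can_build_in_house:
        # Accept platform exposure only when there is no in-house option yet.
        return Tier.STRATEGIC_API
    return Tier.PROPRIETARY if differentiating else Tier.OPEN_SOURCE
```

Under these assumptions, `select_tier(differentiating=False, can_build_in_house=True)` returns `Tier.OPEN_SOURCE`, which matches the classification and summarization examples above.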

The Feedback Architecture

Learning loops require intentional design. Every decision must generate data that feeds improvement:

| Stage | What to Capture | Why It Matters |
|---|---|---|
| Input Capture | What information did the system use to make this decision? | Context matters for learning. Store with the decision, not separately. |
| Decision Recording | What did the system decide, and with what confidence? | Track not just the output but the reasoning path. |
| Outcome Measurement | What happened? Did the action achieve the intended result? | Timeframe and side effects matter as much as success/failure. |
| Learning Integration | How does this outcome update the decision logic? | What patterns does it reinforce or contradict? |

Most implementations capture decisions but not outcomes. They record what the system recommended but not whether it worked. Without outcome data, learning loops can't close. The system never improves.
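A minimal Python sketch of the four stages follows, assuming a single in-memory record per decision. The `DecisionRecord` fields and the two metric helpers are hypothetical. The useful part is the `loop_closure_rate` check, which makes the "decisions without outcomes" gap measurable.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class DecisionRecord:
    decision_id: str
    inputs: dict[str, Any]                 # input capture, stored with the decision
    action: str                            # decision recording
    confidence: float
    reasoning: str                         # the reasoning path, not just the output
    outcome: Optional[bool] = None         # outcome measurement; None until observed
    days_to_outcome: Optional[int] = None  # the timeframe matters too


def close_loop(record: DecisionRecord, succeeded: bool, days_to_outcome: int) -> None:
    """Attach the observed outcome to the decision that caused it."""
    record.outcome = succeeded
    record.days_to_outcome = days_to_outcome


def loop_closure_rate(records: list[DecisionRecord]) -> float:
    """Share of decisions with a recorded outcome. Low values mean open loops."""
    if not records:
        return 0.0
    return sum(r.outcome is not None for r in records) / len(records)


def observed_accuracy(records: list[DecisionRecord]) -> Optional[float]:
    """Success rate over closed loops only; None when nothing has closed yet."""
    closed = [r for r in records if r.outcome is not None]
    if not closed:
        return None
    return sum(bool(r.outcome) for r in closed) / len(closed)
```

Tracking `loop_closure_rate` alongside `observed_accuracy` is a design choice: an accuracy figure computed over a small fraction of decisions says little, so the two numbers are best read together.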

Integration Complexity

The three-layer architecture requires integration most teams underestimate:

| Integration Type | What's Involved | The Hard Part |
|---|---|---|
| Data Integration | Connecting siloed systems into the unified intelligence layer | Technical work is straightforward; organizational work is hard |
| Workflow Integration | Embedding decision outputs into existing processes | Where do decisions surface? How do users interact? What happens after? |
| Feedback Integration | Capturing outcomes and routing them to the decision engine | Often requires instrumenting systems not designed for outcome tracking |

Companies that treat integration as a phase underestimate the ongoing work. The unified intelligence layer needs continuous attention. New data sources emerge. Existing sources change. Outcome tracking requires maintenance. Integration isn't a project—it's an operational capability.

The Captain System Checklist

Six questions reveal whether you're building a captain or another copilot:

| # | Question | What a "No" Means |
|---|---|---|
| 1 | Can your system make a decision without human approval for routine cases? | You've built a recommendation engine, not a decision system |
| 2 | Does every decision generate data that improves subsequent decisions? | You've built rules, not learning loops. Features plateau. |
| 3 | Can you trace any decision back to the data and logic that produced it? | Trust will limit adoption. Explainability enables buy-in. |
| 4 | Would users trust the decision enough to act without verification? | You've added a step to their workflow, not removed one |
| 5 | Does your decision accuracy improve measurably over time? | Your learning loops aren't working. Something is broken. |
| 6 | Can an AI agent access your system's decisions and data without using your UI? | You've built a dashboard, not a business layer. Agents will route around you. |

Score yourself honestly. Most companies building "AI features" score two or three out of six. Captain systems score five or six.
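If you want to run the checklist across several products or teams, a small Python helper keeps the scoring consistent. The key names are shorthand invented here for the six questions. The cutoff of five comes from the scoring guidance above.

```python
# Shorthand keys for the six checklist questions, in order (hypothetical names).
CHECKLIST = (
    "decides_without_approval",
    "decisions_generate_learning_data",
    "decisions_are_traceable",
    "users_act_without_verification",
    "accuracy_improves_over_time",
    "agents_can_access_without_ui",
)


def score_checklist(answers: dict[str, bool]) -> tuple[int, bool]:
    """Return (number of "yes" answers, whether that meets the captain bar).

    Unanswered questions count as "no". Captain systems score five or six.
    """
    unknown = set(answers) - set(CHECKLIST)
    if unknown:
        raise ValueError(f"unknown checklist keys: {sorted(unknown)}")
    total = sum(1 for key in CHECKLIST if answers.get(key, False))
    return total, total >= 5
```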

Building vs. Buying Decision Systems

The build-or-buy question for decision systems differs from typical software procurement.

Most AI vendors deliver copilots, not captains. Their business model depends on selling to many customers. Decision systems require proprietary data specific to your context. Vendors can sell you the engine, but they can't sell you the intelligence layer that makes it valuable.

Integration complexity exceeds implementation complexity. Buying a decision engine solves the smallest part of the problem. Connecting it to your data sources, embedding it in your workflows, building the feedback loops—that's where the work lives. Vendors who promise turnkey solutions are selling copilots labeled as captains.

What to evaluate in decision system partners:

Do they help you build your intelligence layer, or do they provide their own? Partners who build on your data create assets you own. Partners who provide their intelligence create dependencies you rent.

Do they architect for learning loops, or do they configure rules? Ask about outcome tracking, feedback integration, confidence calibration. If the answer sounds like rule configuration, you're buying a copilot.

Do they invest in trust architecture, or do they skip to capability? Explainability, audit trails, governance frameworks—these determine adoption. Vendors who rush past them deliver demos, not production systems.

When to build internally: You have the data, the engineering capacity, and decisions that create competitive differentiation. Building creates assets. Buying creates dependencies.

When to partner: You need acceleration, architectural guidance, or capabilities your team hasn't built before. The right partner transfers knowledge, not just delivers features.

When to buy: The decision is commodity—similar across companies, not a source of differentiation. Classification, summarization, routine analysis. Don't build what you can buy when it doesn't create competitive advantage.

| Approach | Best When | Creates | Watch Out For |
|---|---|---|---|
| Build | Competitive differentiation + data + engineering capacity | Assets you own | Time to value, opportunity cost |
| Partner | Need acceleration + knowledge transfer | Capabilities + internal learning | Partners who create dependency, not capability |
| Buy | Commodity decisions, no differentiation value | Speed to deployment | Vendors selling copilots labeled as captains |

The Path Forward

Decision intelligence isn't a feature you ship. It's an architectural capability you build over time.

The companies achieving 100% growth while traditional SaaS stalls at 23% didn't get there by shipping more AI features faster. They built decision architecture from day one. Their systems learn. Their advantages compound. Their moats widen every month.

You have a narrower window than they did. The Triple Squeeze compresses timelines. AI-native competitors ship decision systems while you're still debating copilot features. Customers explore DIY replacements while you optimize workflows they're ready to abandon. Platform providers learn your business through API calls while you build on their infrastructure.

The path forward isn't shipping faster. It's building differently.

The SaaS companies that survive the next cycle won't be the ones with the best dashboards or the most AI features. They'll be the ones that became essential business infrastructure—high-integrity services that both humans and agents rely on for canonical state, trusted decisions, and auditable outcomes. Your product becomes less about screens and more about being the authoritative source that everything else depends on.

Start with the intelligence layer. Audit your data sources. Identify what's siloed, what's missing, what's underutilized. The unified intelligence layer takes longest to build and creates the most defensible advantage.

Design for learning, not rules. Every decision system you build should improve over time. Architect the feedback loops before you architect the decision logic. Outcome tracking isn't optional.

Invest in trust architecture. Explainability and governance determine adoption velocity. Skip them and your decision system becomes another feature users route around.

Move in 90-day cycles. Pick one decision domain. Build the three-layer architecture for that domain. Prove value. Expand. Transformation happens incrementally, not in big-bang implementations.

The difference between companies that lead the next cycle and companies that become case studies isn't capability or resources. It's architectural intent applied early enough to compound.

Features don't defend. Decisions do.

What decision could your product own—not just enable—starting this quarter?

