What 'Sovereign AI' Actually Means for Your Product Strategy

France is spending €109 billion on AI infrastructure. Singapore admits GPUs are in short supply and they face 'intense global competition' to access them. Your product team is debating whether to increase OpenAI API spend by 20%. These aren't different conversations—they're the same conversation at different scales.

Andrew Hatfield

December 9, 2025

France is spending €109 billion on AI infrastructure.

Not AI features. Infrastructure.

Thirty-five datacenter sites across the country. Nuclear-powered compute clusters capable of 1 gigawatt each. FluidStack alone is building a €10 billion supercomputer scheduled for 2026. Microsoft committed €4 billion. EVROC pledged another €4 billion for 50,000 GPUs.

This isn't a single announcement. It's the culmination of a 7-year strategic arc. In 2018, the Villani Report, commissioned by the French government, warned that France and Europe "may already appear to be 'digital colonies.'" In 2024, they committed €25 billion in public investment. At the February 2025 AI Action Summit, they announced an additional €109 billion in infrastructure commitments, combining government, enterprise, and private investment. That's €134 billion total to build sovereign AI capacity.

Meanwhile, your product team is debating whether to increase OpenAI API spend by 20%.

These aren't different conversations. They're the same conversation at different scales.

Nations investing billions have concluded that dependency on American AI infrastructure is an existential risk worth massive capital allocation to avoid. Most SaaS companies have concluded it's a procurement issue to be managed by finance.

One of these conclusions is dangerously wrong.

In This Series

- What 'Sovereign AI' Actually Means for Your Product Strategy — You are here

- The Dependency Architecture Being Built for Allies — How "sovereign" partnerships still concentrate control

- What National AI Strategy Teaches SaaS Companies — Six sovereignty principles that translate to product decisions

- The Constraint Nobody's Modeling — Why the window for architecture decisions is closing

The Sovereignty Rush Isn't About Nationalism

It's tempting to dismiss national AI investments as protectionism or political theatre. That reading misses the strategic substance.

France didn't commit €109 billion because they hate American technology. They committed €109 billion because they analyzed what happens when critical infrastructure is controlled by external parties.

European policy analyses consistently identify the same concerns: relying solely on U.S. cloud providers raises issues around data security and extraterritorial legal exposure (particularly under the U.S. CLOUD Act), strategic dependence, and cost unpredictability amplified by tariff risks. These aren't abstract concerns. They're the dependency channels that determine whether you control your AI future.

Read that again. Data security. Strategic dependence. Cost unpredictability. Extraterritorial legal exposure.

Every one of those concerns applies to your SaaS product's AI architecture.

Singapore's National AI Strategy 2.0, published in December 2023, acknowledges a hard truth: "GPUs are in short supply, and we face intense global competition to access them."

Singapore is a sophisticated, well-resourced country. They looked at the compute landscape and concluded they cannot build sovereign infrastructure at France's scale. Instead, they're pursuing what they call "secure access"—strategic partnerships that preserve optionality rather than full independence.

India took a third path. Their NITI Aayog National Strategy for AI emphasizes "late-mover advantage": the recognition that you don't need to pioneer if you architect strategically. Their framing: "Adapting and innovating the technology for India's unique needs and opportunities would help it in leap frogging, while simultaneously building the foundational R&D capability."

Three different countries. Three different approaches. But one common thread: all of them treat AI infrastructure dependency as a strategic question, not an operational one. Together, these national strategies reveal six distinct sovereignty dimensions that translate directly to product architecture.

Your board almost certainly doesn't.

The "Sovereign" Definition Battle Happening Right Now

The word "sovereign" is being contested as we speak. And the definition that wins will shape your architecture decisions.

On December 4, 2025, OpenAI announced "OpenAI for Australia"—the first OpenAI for Countries program in Asia Pacific. They signed an MoU with NEXTDC to develop what they call "sovereign AI infrastructure" at a hyperscale campus in Sydney.

Sam Altman framed it as partnership:

"Through OpenAI for Australia, we are focused on accelerating the infrastructure, workforce skills and local ecosystem needed to turn that opportunity into long-term economic growth."

That same week, Simon Kriss at Sovereign Australia AI offered a different definition. His company ordered 256 Nvidia Blackwell B200 GPUs—the largest sovereign AI hardware deployment by an Australian company—and fine-tuned Llama 3.1 with 2 billion tokens of Australian data. His framing in the Sydney Morning Herald:

"We don't need to go head-to-head with OpenAI, and we don't want to. They're five years and several billion dollars ahead of us. But most Australian business use cases are simply a language model."

Two definitions of sovereign. One means American models running on local servers. The other means local models that you control.

Thomas Kelly, founder of Heidi Health and one of Australia's most visible AI-native founders, cut through the framing in his response to Australia's National AI Plan:

"Those who don't produce become AI consumers—and must accept prices subject to the monopolistic positions of others. That is not sovereignty."

That's the clearest articulation of the strategic question I've seen from a product leader.

If "sovereign" means data residency while models, updates, and capability roadmaps still flow from Redmond or San Francisco, you're not independent. You're a tenant with a local address.

If "sovereign" means the ability to switch providers, run alternative models, and control what your AI learns from your data—that requires different architecture entirely.

Which definition describes your product today?

The SaaS Sovereignty Gap

While nations invest billions to reduce AI dependency, most B2B SaaS companies are accelerating their dependency. Often without recognizing they're doing it.

The numbers tell the story. According to Emergence Capital's May 2024 research, 69% of B2B SaaS companies use OpenAI as their primary LLM. Our own survey of B2B SaaS executives in September 2025 found that 83% report high dependency on proprietary foundation model APIs.

Here's the concerning part: most of those same executives rate this dependency as moderate or low strategic risk.

That gap—high dependency, low perceived risk—is what I call dependency blindness. When everyone uses the same providers, the risk becomes invisible. It's like fish not noticing water.

James Pember, VP of Product at Komo, captured the disconnect in his reaction to Australia's National AI Plan: "The word 'union' appears 11 times, while the word 'startup' appears exactly 0 times... It speaks to 'capturing value', but not creating value."

His frustration points to a deeper pattern. Many governments and companies focus on how to capture value from AI rather than asking whether the architecture they're building allows them to create independent value at all.

Every sprint, you ship features. Every feature deepens API integration. Every integration increases switching costs. The dependency grows invisibly, with each integration adding weeks to your switching cost, while your roadmap celebrates velocity. This is one of the three compounding forces squeezing SaaS companies (the Triple Squeeze). With every release, the gap widens between shipping AI features and building AI transformation.

And your board? They're asking "are we shipping AI features?" Not "are we building dependencies that limit future options?"

What This Means for Your Architecture Decisions

The question isn't whether to pursue AI sovereignty. It's whether you'll architect for optionality now or pay 10x for remediation later.

We've seen this before. The same platform consolidation pattern has repeated five times in 30 years: Microsoft in the 1990s, Google in the 2000s, Meta and AWS in the 2010s, and now OpenAI in the 2020s.

Companies that architected for cloud independence early—multi-cloud strategies, abstraction layers, portable data models—had 10x easier transitions when AWS lock-in became painful. Companies that went "all-in" on a single provider because it was faster are now spending billions on repatriation.

The same dynamic is playing out in AI, but faster. AWS dependency took 3-5 years to become painful. AI dependency is compressing that timeline to 12-24 months. The compute, talent, and policy constraints are tightening faster than most teams recognize.

France understood this. Their 7-year arc from diagnosis (2018) to major buildout (2025) reflects strategic patience—making architecture decisions when options were open, not scrambling when they'd already closed.

India understood it differently. Their "late-mover advantage" thesis recognizes that you don't need to pioneer if you learn from others' patterns. But you do need to architect strategically rather than defaulting to whatever's fastest.

Singapore understood their constraints. They can't build at France's scale, so they're optimizing for "secure access"—strategic relationships that preserve the ability to shift.

Each approach is valid for different contexts. What's not valid is pretending the decision doesn't exist.

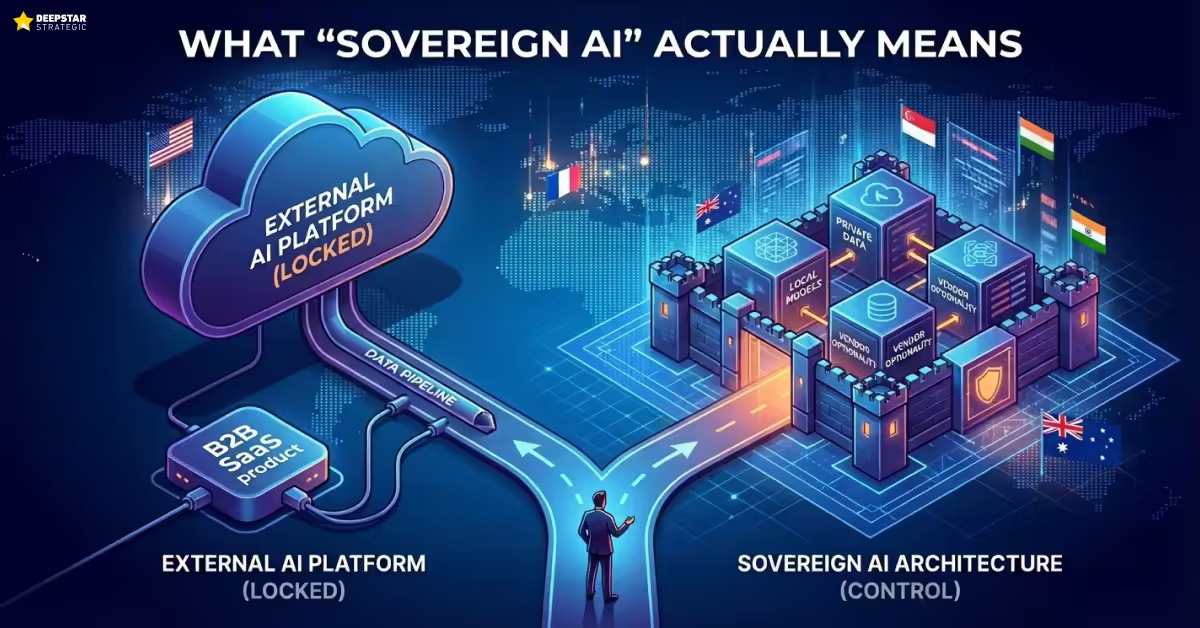

The Sovereignty Spectrum

Think about your AI architecture on a spectrum:

Full Dependence: Single provider, tightly coupled APIs, no abstraction layer. Switching would require rewriting core features. This is where the Triple Squeeze hits hardest.

Strategic Optionality: Multiple providers or abstraction layers that enable switching. Clear understanding of which capabilities could migrate and which couldn't.

Complete Independence: Self-hosted models, proprietary training data, full control over capability roadmap. No external dependencies for core intelligence.

Most SaaS companies today sit at full dependence while telling themselves they have optionality.
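What do "abstraction layers that enable switching" look like in code? Here is a minimal sketch. It assumes a Python service in which every AI feature goes through one internal interface. `CompletionProvider`, `StubProvider`, and `ModelRouter` are hypothetical names chosen for illustration. The stubs stand in for real vendor adapters, and the sketch makes no actual vendor SDK calls.

```python
from dataclasses import dataclass
from typing import Protocol


class CompletionProvider(Protocol):
    """Hypothetical internal interface that every model backend must satisfy."""

    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubProvider:
    """Stand-in for a vendor adapter. A real adapter would wrap one vendor's SDK
    (or a self-hosted model server) behind the same complete() signature."""

    name: str
    available: bool = True

    def complete(self, prompt: str) -> str:
        if not self.available:
            raise ConnectionError(f"{self.name} unavailable")
        return f"[{self.name}] {prompt}"


@dataclass
class ModelRouter:
    """Feature code calls the router, never a vendor SDK directly."""

    providers: dict[str, CompletionProvider]
    order: list[str]  # preference order: changing the primary is a config edit

    def complete(self, prompt: str) -> str:
        errors = []
        for key in self.order:
            try:
                return self.providers[key].complete(prompt)
            except ConnectionError as exc:  # try the next provider in order
                errors.append(str(exc))
        raise RuntimeError("all providers failed: " + "; ".join(errors))


vendor_a = StubProvider("vendor-a")
self_hosted = StubProvider("self-hosted")
router = ModelRouter(
    providers={"primary": vendor_a, "fallback": self_hosted},
    order=["primary", "fallback"],
)
print(router.complete("Draft a reply"))  # [vendor-a] Draft a reply
vendor_a.available = False
print(router.complete("Draft a reply"))  # [self-hosted] Draft a reply
```

The design choice that matters is the indirection. Feature code depends only on the router, so reordering providers or adding a self-hosted backend becomes a configuration change rather than a rewrite. The layer does not make prompts, fine-tuning, or evaluations portable, and those still need testing per provider. That is the "which capabilities could migrate and which couldn't" question from the Strategic Optionality tier.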

Three Questions for Your AI Steering Committee This Quarter

Before your next product planning cycle, get honest answers to these questions:

1. How many distinct foundation model providers do you currently use in production?

If the answer is one, you have concentration risk. If the answer is "we use OpenAI for everything," you have concentration risk that you're probably underweighting.

2. How much engineering effort would it take to switch primary providers?

Days to weeks suggests you've architected for optionality. Weeks to months suggests significant but achievable migration. Months to quarters means you've already made your choice—you just haven't acknowledged it.

3. What percentage of your AI features would break if your primary provider changed terms tomorrow?

Not "stopped working"—changed terms. Pricing. Data policies. Competitive restrictions. If the answer is "most of them," your product strategy is dependent on a contract you don't control.
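Questions 1 and 3 can be answered from an inventory rather than a debate. Here is a minimal sketch. It assumes you can list each production AI feature and the model providers it can currently call. The inventory, the provider names, and `dependency_report` are all hypothetical. The function counts distinct providers and the share of features whose only backend is the most-used provider. That share is a rough proxy for question 3, not a measure of migration effort (question 2).

```python
from collections import Counter

# Hypothetical inventory: each production AI feature mapped to the
# provider(s) it is currently able to call.
FEATURES = {
    "ticket_summary": ["vendor-a"],
    "semantic_search": ["vendor-a", "self-hosted"],
    "draft_reply": ["vendor-a"],
    "classification": ["self-hosted"],
}


def dependency_report(features: dict[str, list[str]]) -> dict:
    """Answer questions 1 and 3 from the inventory (assumes it is non-empty)."""
    usage = Counter(p for providers in features.values() for p in providers)
    primary, _ = usage.most_common(1)[0]  # most widely used provider
    # Exposed = the primary provider is the feature's ONLY backend,
    # so a change in that provider's terms hits it with no fallback.
    exposed = sorted(f for f, providers in features.items() if set(providers) == {primary})
    return {
        "distinct_providers": len(usage),
        "primary": primary,
        "pct_exposed": round(100 * len(exposed) / len(features)),
        "exposed_features": exposed,
    }


print(dependency_report(FEATURES))
# {'distinct_providers': 2, 'primary': 'vendor-a', 'pct_exposed': 50,
#  'exposed_features': ['draft_reply', 'ticket_summary']}
```

Treat the percentage as a floor on your exposure. A configured fallback only counts if it has been tested against the same prompts and quality bar.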

The Strategic Reality

Nations are spending billions because they understand something most SaaS executives haven't internalized: in the AI era, dependency isn't a procurement issue. It's a survival question.

The architecture decisions you make this quarter will determine whether you still have strategic options in three years, or whether your platform providers will already have made those choices for you.

France concluded that €109 billion was a reasonable price for AI independence. Singapore concluded they couldn't match that investment but needed to preserve optionality through strategic partnerships. India concluded they could architect for late-mover advantage without pioneering infrastructure.

What's your company's conclusion?

And who on your team is actually running that analysis?

But here's where it gets complicated. The "solutions" being positioned for allies—the frameworks and partnerships designed to give friendly nations AI access—still concentrate power in ways that matter. The dependency architecture being built for allies is more subtle than outright lock-in, but the strategic implications are just as significant.

That's what we'll examine in The Dependency Architecture Being Built for Allies.

Let's Discuss Your AI Strategy

30-minute discovery call to explore your AI transformation challenges, competitive positioning, and strategic priorities. No pressure—just a conversation about whether platform independence makes sense for your business.
