The Dependency Architecture Being Built for Allies

OpenAI's 'sovereign deployment options' sound like independence. The US government's AI Action Plan describes these as partnerships. Read the actual documents and you'll find explicit language about 'exporting American AI to allies.' That's not a sovereignty framework—it's a dependency architecture with better PR.

Andrew Hatfield

December 9, 2025

This is Part 2 of the AI Sovereignty series. Start with Part 1 for the strategic foundation.

OpenAI's "sovereign deployment options" sound like independence. Microsoft's "AI Access Principles" promise democratized AI for allied nations. The US government's AI Action Plan describes these as partnerships designed to counter Chinese influence.

Read the actual documents and a different picture emerges.

The Trump administration's July 2025 AI Action Plan states the goal explicitly:

"Export its full AI technology stack—hardware, models, software, applications, and standards—to all countries willing to join America's AI alliance."

That's not a sovereignty framework. That's a dependency architecture with allied nations as the customers.

The question isn't whether these partnerships offer value—they do. Access to cutting-edge AI capabilities, reduced development costs, faster time-to-market. The question is whether "sovereign AI infrastructure" means what you think it means, and what the architecture actually implies for your strategic options.

What "Allied AI Access" Actually Means

The US approach to AI diplomacy is explicit—if you read the source documents.

The AI Action Plan opens with a framing that leaves little room for interpretation:

"Whoever has the largest AI ecosystem will set global AI standards and reap broad economic and military benefits. Just like we won the space race, it is imperative that the United States and its allies win this race."

This isn't partnership language. It's dominance language. And the mechanism for achieving that dominance is equally clear: ensure allies build on American technology rather than alternatives.

The plan calls for exporting "full-stack American AI technology packages" to allied nations. Full stack means hardware, models, software, applications, and standards. Every layer controlled by American providers.

VP Vance articulated the administration's priority at the Paris AI Summit in February 2025: focusing on "AI opportunity" rather than safety frameworks. This reflects a policy philosophy that views acceleration as more important than precautionary governance.

Readers will have their own views on which approach is correct. The business-relevant point is that either approach could be reversed in four years, so companies should not build architecture that depends on either philosophy persisting.

None of this is hidden. It's stated policy. The question is whether companies building on these frameworks have read the policy and understood what it implies.

The Three Dependency Channels That Remain

Even when AI infrastructure is positioned as "sovereign," three dependency channels typically remain intact. Understanding these channels reveals why local data residency doesn't equal strategic independence—and why the Triple Squeeze catches so many companies off guard.

Channel 1: Compute Infrastructure

The Stargate project—announced January 21, 2025—represents the largest AI infrastructure commitment in history. $500 billion over four years. 7 gigawatts of planned capacity. OpenAI, SoftBank, Oracle, and MGX as partners.

Here's the detail that matters most: according to the Financial Times, OpenAI intends to be the customer for all of the new capacity—which it needs to train and run its AI models.

That's not neutral infrastructure. That's captive capacity.

When OpenAI announces "sovereign AI infrastructure" partnerships in Australia, UAE, Norway, or the UK, the compute capacity connects back to this architecture. Local data centers, yes. But the capacity allocation, the model access, the capability roadmap—all flow from infrastructure that serves OpenAI's interests first.

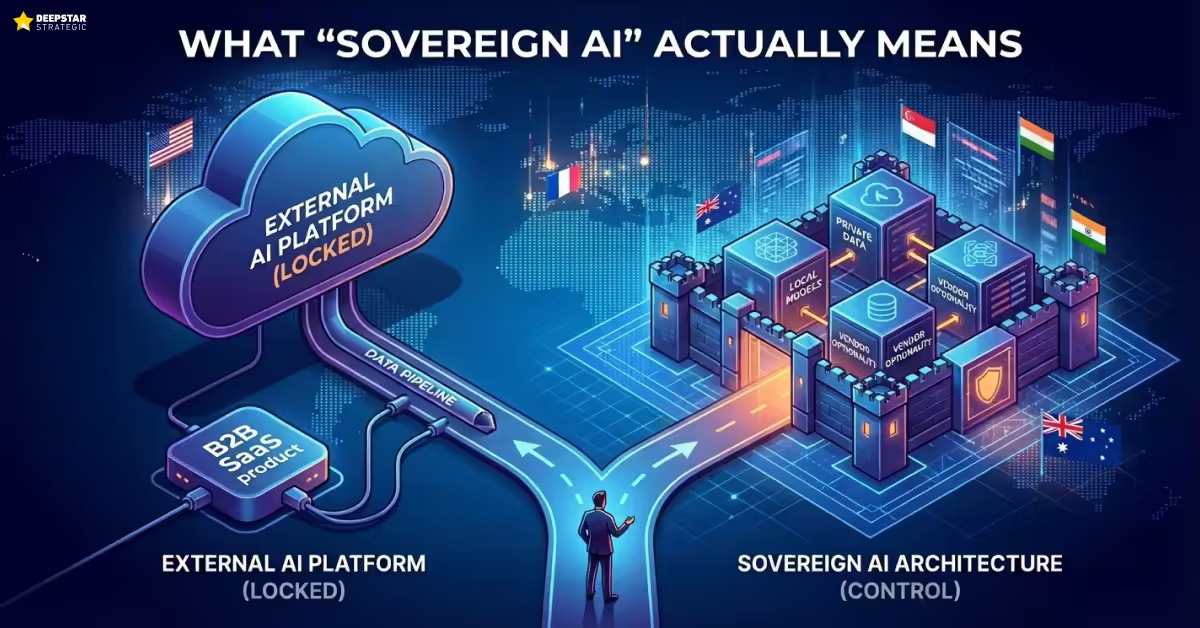

France's €109 billion investment looks different through this lens. They're building 35 datacenter sites because they don't want their compute capacity subject to another entity's allocation decisions. As we explored in What 'Sovereign AI' Actually Means for Your Product Strategy, this is the difference between geographic sovereignty and strategic sovereignty.

Channel 2: Model Updates and Capability

When you deploy OpenAI, Microsoft, or Google models—even in "sovereign" configurations—you don't control the training, the weights, or the capability roadmap. Every update flows from the provider.

This seems like a feature, not a bug. You get continuous improvement without engineering investment.

But consider what you're accepting: your product capabilities are determined by decisions made in San Francisco, Redmond, or Mountain View. If the next model update changes performance characteristics, pricing, or terms, you adapt or you rebuild.

Microsoft's Azure "sovereign" offerings provide local data residency. The marketing emphasizes that your data stays in-country. But model updates, security patches, and capability improvements still flow from Microsoft. The sovereignty is geographic, not strategic.

This is the "hybrid cloud" pattern from 2012-2020 applied to AI. It looked like flexibility. It preserved vendor control where it mattered.

Channel 3: Telemetry and Learning

Every API call teaches your provider something. Every error, every edge case, every successful application becomes data that improves their models and informs their product roadmap. And the exposure extends beyond intentional data sharing—when Mixpanel was breached in November 2025, OpenAI API customers' data was compromised through a vendor they'd never evaluated.

OpenAI processes billions of API calls monthly. That's not just revenue—it's the largest AI training dataset ever assembled, contributed by customers who are paying for the privilege of providing it.

This is the same dynamic that let AWS identify which services to build natively. They called it "offer/watch/copy." Offer primitive services. Watch what customers build. Copy the successful patterns as native features. The pattern has repeated five times in 30 years—and each cycle compresses the timeline.

With AI, the learning is more direct. They don't just see what you're building—they see the prompts, the use cases, the failure modes. Every optimization you discover becomes training data for their next model.

OpenAI for Australia will train 1.2 million Australian workers and small business customers through the OpenAI Academy, with partnerships including Commonwealth Bank (AI training for 1 million small business customers), Coles (tailored training for entire workforce), and Wesfarmers (training for 118,000 employees). That's valuable upskilling. It's also 1.2 million people learning to work within OpenAI's paradigm, creating organizational lock-in that extends far beyond technical architecture.

The Policy Instability You're Not Modeling

Here's a constraint that doesn't appear in most AI strategy discussions: the policy environment you're building on can reverse completely within hours of a change in administration.

On October 30, 2023, the Biden administration issued Executive Order 14110—a comprehensive framework for AI safety and governance. It established reporting requirements for frontier models, created the AI Safety Institute within NIST, mandated equity considerations, and included worker protections referencing collective bargaining rights.

On January 20, 2025, the Trump administration revoked it. Day one. The entire framework disappeared.

The Policy Swing in Detail

The reversals reflect fundamentally different policy philosophies:

| Policy Element | Biden EO 14110 (Oct 2023) | Trump AI Action Plan (Jul 2025) |
|---|---|---|
| Regulatory philosophy | Reporting requirements for frontier models | Reduced compliance burden |
| Institutional focus | Safety research and testing (AISI) | Standards and innovation (CAISI) |
| Social considerations | Equity and civil rights provisions | "Free from ideological bias" |
| Framework guidance | Included misinformation, DEI, climate references | Narrowed to technical standards |
| Workforce approach | Worker protections including collective bargaining | Workforce retraining programs |

What Changed Institutionally

The AI Safety Institute was restructured and renamed to the Center for AI Standards and Innovation—reflecting a shift from risk mitigation to capability development. February 2025 reports indicated significant staff reductions at NIST, including most AISI personnel.

The Business-Relevant Takeaway

This analysis takes no position on which approach is correct. Reasonable people disagree on the proper balance between innovation speed and precautionary governance.

But here is the fact that matters for your architecture: US AI policy swung 180 degrees within hours of a new administration taking office. It could swing again in four years, or sooner if political conditions change.

Your AI architecture will outlast multiple administrations. Which policy environment are you building for?

The State-Level Complication

The regulatory vacuum at the federal level isn't creating clarity—it's creating fragmentation.

While the federal government may slow regulatory enforcement, states are likely to fill the void. California, Colorado, and other states are advancing their own AI regulations. A patchwork of state requirements may prove more constraining than a single federal framework would have been.

If your AI architecture depends on stable policy, you're depending on something that demonstrably doesn't exist. This policy volatility is one of the compounding forces that makes delayed architecture decisions so costly.

What This Means for Your Architecture Decisions

The allied AI framework isn't inherently bad. Criticizing it as pure dependency creation misses real value: access to capabilities you couldn't build, faster time-to-market, reduced engineering investment.

The mistake is treating alliance as sovereignty.

Singapore's government understood this distinction. Their National AI Strategy 2.0 explicitly acknowledges they cannot build sovereign compute at scale. Their strategy is "secure access"—strategic partnerships that preserve optionality rather than claiming independence they don't have. Thomas Kelly's framing applies here too: accepting prices subject to monopolistic positions isn't sovereignty.

That's honest architecture. They know they're dependent. They're managing dependency strategically rather than pretending it doesn't exist.

When Alliance Makes Strategic Sense

Alliance makes sense for capabilities where dependency doesn't threaten your competitive position:

- Non-differentiating features: AI capabilities that every competitor will have. Speed matters more than control.

- Rapid prototyping: Testing use cases before committing architecture. Learning what works.

- Capabilities you genuinely can't build: Frontier model performance that requires infrastructure you'll never have.

When Independence Is Required

Independence is required for capabilities where dependency threatens your competitive position:

- Competitive crown jewels: Algorithms, data relationships, and decision logic that differentiate you. If your provider learns these, they learn your business.

- Decision intelligence: Systems that don't just enable workflows but make judgments. The learning from these systems is your moat.

- Anything that becomes your moat: If it's what makes you defensible, it can't depend on someone with every incentive to learn from you.

The Architecture That Preserves Optionality

The strategic response isn't "avoid all platforms" or "build everything yourself." It's architecture that acknowledges dependency while preserving the ability to shift.

Abstraction layers: Interface definitions that let you swap providers without rewriting features. The investment is weeks of engineering. The payoff is strategic flexibility.
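To make the abstraction-layer idea concrete, here is a minimal Python sketch. Every name in it (`TextModel`, `HostedModel`, `LocalModel`, `summarize_ticket`) is hypothetical, and the two adapters are stand-ins that only format a string; a real adapter would wrap whichever vendor SDK or self-hosted runtime you use.

```python
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical interface: the only surface product code depends on."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class HostedModel:
    """Stand-in for a vendor-hosted model (a real adapter would call the vendor SDK)."""
    vendor: str

    def complete(self, prompt: str) -> str:
        return f"[{self.vendor}] {prompt}"


@dataclass
class LocalModel:
    """Stand-in for a self-hosted open-weights model."""
    name: str

    def complete(self, prompt: str) -> str:
        return f"[local:{self.name}] {prompt}"


def summarize_ticket(model: TextModel, ticket: str) -> str:
    # Feature code sees only the TextModel interface, never a vendor SDK.
    return model.complete(f"Summarize: {ticket}")
```

Swapping providers then means passing a different adapter to `summarize_ticket`; the feature code itself does not change.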

Multi-provider capability: Even basic ability to route requests to alternative providers changes your negotiating position and your risk profile.
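A basic version of that routing ability can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a production router: providers are modeled as hypothetical `(name, callable)` pairs, and any exception is treated as a reason to fall back.

```python
from typing import Callable, Sequence

# Hypothetical shape: a provider is a (name, callable) pair.
Provider = tuple[str, Callable[[str], str]]


class AllProvidersFailed(RuntimeError):
    """Raised when every configured provider has failed."""


def route(prompt: str, providers: Sequence[Provider]) -> tuple[str, str]:
    """Try providers in priority order and return (provider_name, response)."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))
```

Returning the provider name alongside the response makes it possible to log how often traffic actually falls back, which is the data you need in a pricing or terms negotiation.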

Clear sensitivity classification: Know which features could migrate to open source models, which require provider capabilities, and which need complete independence. Different tiers, different architectures.
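One way to keep such a classification enforceable is to encode it as data rather than leave it in a slide deck. The Python sketch below assumes three tiers matching the ones just described; the feature names and backend labels (`hosted_api`, `self_hosted`) are invented for illustration.

```python
from enum import Enum


class Tier(Enum):
    OPEN_MODEL_OK = "could migrate to an open source model"
    PROVIDER_REQUIRED = "requires provider capabilities"
    INDEPENDENT = "needs complete independence"


# Hypothetical feature inventory; replace with your own features.
FEATURES = {
    "email_autocomplete": Tier.OPEN_MODEL_OK,
    "contract_analysis": Tier.PROVIDER_REQUIRED,
    "pricing_engine": Tier.INDEPENDENT,
}

# Which backends each tier may use (labels are assumptions).
ALLOWED_BACKENDS = {
    Tier.OPEN_MODEL_OK: {"hosted_api", "self_hosted"},
    Tier.PROVIDER_REQUIRED: {"hosted_api"},
    Tier.INDEPENDENT: {"self_hosted"},
}


def is_allowed(feature: str, backend: str) -> bool:
    """Return True if the feature's tier permits running on this backend."""
    return backend in ALLOWED_BACKENDS[FEATURES[feature]]
```

A check like `is_allowed("pricing_engine", "hosted_api")` can run in code review or CI, so a crown-jewel feature cannot quietly acquire a hosted dependency.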

Explicit dependency tracking: Most companies can't answer "what percentage of our AI features break if OpenAI changes terms?" If you can't answer that question, you can't make informed architecture decisions.
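Answering that question takes little more than an inventory and a few lines of arithmetic. The Python sketch below assumes each feature records the set of providers it can run on today; the `Feature` type, the inventory, and the provider labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    providers: frozenset[str]  # providers this feature can run on today


def exposure(features: list[Feature], provider: str) -> float:
    """Percentage of features with no alternative if `provider` becomes unavailable."""
    if not features:
        return 0.0
    broken = [f for f in features if f.providers == {provider}]
    return 100.0 * len(broken) / len(features)


# Hypothetical inventory for illustration only.
INVENTORY = [
    Feature("search", frozenset({"openai"})),
    Feature("summaries", frozenset({"openai", "self_hosted"})),
    Feature("chat", frozenset({"openai"})),
    Feature("tagging", frozenset({"self_hosted"})),
]
```

With this inventory, `exposure(INVENTORY, "openai")` returns `50.0`: two of the four features have no fallback. Counting features equally is a simplification; weighting by revenue or usage would give a more decision-relevant number.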

The Honest Trade-Off

Allied AI access is a trade-off, not a solution.

You get: cutting-edge capabilities, faster development, lower upfront investment, access to infrastructure you couldn't build.

You accept: strategic dependency on providers whose interests may diverge from yours, policy exposure in a volatile environment, learning flows that benefit your provider's competitive intelligence.

The companies that will navigate this well aren't the ones who avoid the trade-off. They're the ones who make it consciously, with architecture that preserves the ability to shift when conditions change.

France decided €109 billion was worth spending to avoid the trade-off entirely. Singapore decided to accept it while managing exposure carefully. India decided to architect for late-mover advantage while building foundational capability.

What's your conscious decision? And can you articulate the architecture that implements it?

Nations that recognized the distinction between alliance and sovereignty years ago are publishing sophisticated strategies for managing the trade-off. Their conclusions—developed with billions in research budgets—offer a playbook that SaaS companies can adapt.

That's what we'll extract in What National AI Strategy Teaches SaaS Companies.
