The Security Cost of Platform Dependency: What the OpenAI/Mixpanel Breach Reveals About Inherited Risk

OpenAI says the Mixpanel breach wasn't their breach. Technically correct. Strategically irrelevant. Your architecture inherits every weakness in your vendor's supply chain.

Andrew Hatfield

November 27, 2025

Technically Correct. Strategically Irrelevant.

OpenAI says the Mixpanel breach wasn't their breach.

Technically correct. Strategically irrelevant.

If you're an OpenAI API customer, your data was still compromised through their vendor ecosystem. You had no visibility into Mixpanel's security practices. You couldn't evaluate a vendor you didn't know existed in your dependency chain. And you learned about it 18 days after the attackers got your information.

Here's what actually happened.

The Dependency Chain Most AI Strategies Ignore

Mixpanel's JavaScript was embedded in platform.openai.com's frontend. Every time you logged into your OpenAI API account, checked your usage dashboard, or managed your organization settings—Mixpanel tracked that session. A third party you never evaluated was collecting data about your team, your company structure, and your technical environment.

The dependency chain looked like this:

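Your Product ──▶ OpenAI API ──▶ OpenAI Frontend ──▶ Mixpanel ──▶ BREACH

| Link in the Chain | Your Position |
|---|---|
| Your Product | ✅ You control |
| OpenAI API | ✅ You evaluated |
| OpenAI Frontend | ❌ You didn't know about |
| Mixpanel | ❌ You didn't evaluate |
| BREACH | ⚠️ You inherited |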
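The diagram reduces to a simple model: a chain of links, each of which you either evaluated yourself or didn't. Here is a minimal Python sketch. It assumes a hypothetical `Link` record with a single `evaluated` flag, which is far less detail than a real vendor inventory would carry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """One hop in a dependency chain (hypothetical model for illustration)."""
    name: str
    evaluated: bool  # did you review this link's security posture yourself?


def unevaluated_links(chain: list[Link]) -> list[str]:
    """Names of the links whose risk you carry without having evaluated them."""
    return [link.name for link in chain if not link.evaluated]


# The chain from the diagram above. You control your own product, so it
# counts as evaluated; so does OpenAI, the one vendor you reviewed directly.
chain = [
    Link("Your Product", evaluated=True),
    Link("OpenAI API", evaluated=True),
    Link("OpenAI Frontend", evaluated=False),
    Link("Mixpanel", evaluated=False),
]

print(unevaluated_links(chain))  # → ['OpenAI Frontend', 'Mixpanel']
```

Half the chain sits outside anything you reviewed, and the breach happened in that half.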

You probably evaluated OpenAI. You might have reviewed their SOC 2 report, their data processing agreement, their security documentation. But did you evaluate Mixpanel? Did you even know Mixpanel was in the chain?

Here's the thing: Mixpanel wasn't even listed on OpenAI's official sub-processor list. That list includes 15+ vendors—Microsoft, CoreWeave, Oracle, Google Cloud, AWS, Snowflake, TaskUs, Intercom, Salesforce, Accenture, and others. These are the vendors OpenAI formally discloses as processing customer data under their Data Processing Agreement.

Mixpanel was frontend analytics. Not a formal sub-processor. Not disclosed. Not on any list you could have reviewed.

Your vendor's vendor ecosystem extends far beyond what they're contractually required to disclose.

On November 9, an attacker gained unauthorized access to Mixpanel's systems and exported a dataset containing customer identifiable information. Your information. Information about companies building on OpenAI's API—which means information about companies building AI into their products. High-value targets.

The Timeline: 18 Days of Exposure You Couldn't See

Here's how the disclosure cascade played out:

| Date | Event | Days Elapsed | Your Visibility |
|---|---|---|---|
| Nov 9 | Mixpanel detects breach | Day 0 | 🔴 None |
| Nov 10-24 | Mixpanel investigates internally | Days 1-15 | 🔴 None |
| Nov 25 | Mixpanel shares data with OpenAI | Day 16 | 🔴 None |
| Nov 26 | OpenAI notifies customers | Day 17 | 🟡 First notification |
| Nov 27 | You're reading this | Day 18 | 🟢 Now aware |

That's 17 days between breach detection and OpenAI's first customer notification, and 18 days by the time you're reading this. Eighteen days where attackers had your data and you had no idea. Eighteen days where you couldn't take protective action because you didn't know protective action was needed.

And here's the part that should concern you: you had zero visibility into any of it. You couldn't accelerate Mixpanel's internal investigation. You couldn't pressure them to share data faster with OpenAI. You couldn't demand OpenAI notify you sooner. You inherited the entire disclosure timeline of vendors you didn't know existed.
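The day counts in the timeline table are plain date arithmetic. This short Python check reproduces them from the table's dates. The year 2025 is taken from the article's publication date. The check also shows that OpenAI's notification landed on Day 17, and Day 18 is the day of reading.

```python
from datetime import date

# Breach detection date from the timeline table (Day 0).
BREACH_DETECTED = date(2025, 11, 9)

# Single-day events from the table, in chronological order.
EVENTS = {
    "Mixpanel detects breach": date(2025, 11, 9),
    "Mixpanel shares data with OpenAI": date(2025, 11, 25),
    "OpenAI notifies customers": date(2025, 11, 26),
    "You're reading this": date(2025, 11, 27),
}


def days_elapsed(event_date: date, start: date = BREACH_DETECTED) -> int:
    """Whole days from breach detection to a later event."""
    return (event_date - start).days


for name, when in EVENTS.items():
    print(f"Day {days_elapsed(when):>2}: {name}")
# Day  0: Mixpanel detects breach
# Day 16: Mixpanel shares data with OpenAI
# Day 17: OpenAI notifies customers
# Day 18: You're reading this
```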

What Was Actually Exposed

OpenAI's disclosure emphasized what wasn't compromised: no chat content, no API requests, no passwords, no API keys, no payment details. And that's true. The core API infrastructure wasn't breached.

But here's what was exposed through Mixpanel:

- Names and email addresses of OpenAI API account holders

- Organization IDs revealing company structures and team relationships

- Approximate location (city, state, country) based on browser data

- Operating system and browser information for each user

- Referring websites showing what tools and workflows brought users to the platform

This isn't random data. This is a precisely targeted intelligence package.

The attackers now know exactly which companies are building on OpenAI's API. They know who the developers and administrators are at those companies. They know the technical environment those people work in. They know what other tools those people use.

This isn't spam fodder. This is a curated spear-phishing kit for AI developers and the companies employing them.

This Wasn't the Attack. It Was Reconnaissance.

Most breach analyses focus on what was stolen. But the real risk here isn't the data that was exposed—it's what that data enables next.

Think about what the attackers now have:

A verified list of high-value targets. OpenAI API customers aren't random consumers. They're companies building AI into their products—often well-funded, often handling sensitive data, often attractive targets for further exploitation.

Organizational context. The Organization IDs reveal company structures. The attackers can map out who works where, who has administrative access, who the decision-makers might be.

Technical fingerprints. Browser and OS information enables targeted payloads. The attackers know whether you're on Chrome or Firefox, Windows or Mac. They can craft exploits specific to your environment.

Workflow intelligence. Referring websites reveal your tool stack. If attackers know you came to OpenAI from your company's internal dashboard, they know something about your infrastructure. If you came from a specific development tool, they know what you're building with.

Now consider the second-order risk.

If there's ever a vulnerability in OpenAI's platform—or in any of the other tools these companies use—the attackers already have their target list ready. They don't need to do reconnaissance. They've already done it. This breach gave them a pre-qualified database of exactly who to attack and how to reach them.

The Mixpanel breach wasn't the attack. It was preparation for attacks that haven't happened yet.

The Buried Lede in OpenAI's Response

OpenAI's disclosure included a statement that deserves more attention than it's getting:

"Beyond Mixpanel, we are conducting additional and expanded security reviews across our vendor ecosystem and are elevating security requirements for all partners and vendors."

Read that carefully.

They're now conducting expanded security reviews. They're now elevating security requirements.

Which raises an obvious question: what were the security reviews and requirements before this incident?

OpenAI is the most consequential AI infrastructure provider on the planet. Hundreds of thousands of developers and companies build on their API. Their platform processes some of the most sensitive workflows in technology—code generation, document analysis, customer communications, strategic planning.

And their vendor ecosystem security reviews apparently weren't rigorous enough to prevent a third-party analytics provider from exposing customer data for 18 days.

As Vaughan Shanks, CEO of cybersecurity firm Cydarm Technologies, noted:

We should expect that a company with the profile and resources of OpenAI would do a much better job of supply chain security. The breach notification SLA should be much better than 18 days. But even if OpenAI is now getting service credits from Mixpanel, this doesn't compensate the real victims—OpenAI's customers.

That's the asymmetry no one talks about.

OpenAI makes Mixpanel whole. But who makes the customers whole?

Risk flows downstream. Compensation doesn't.

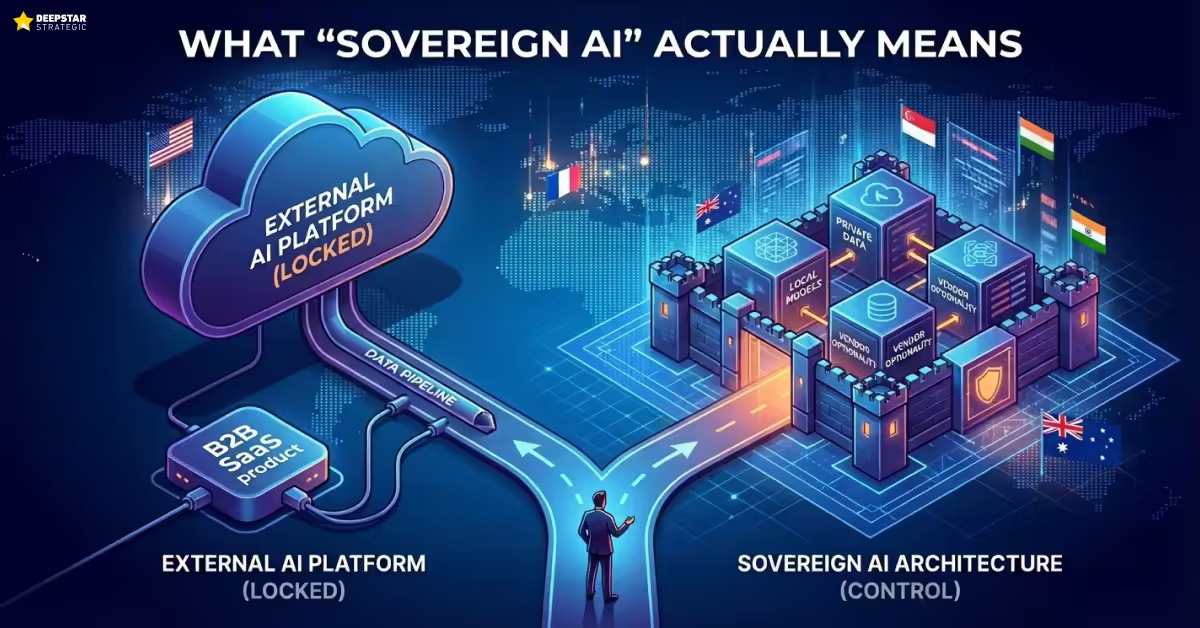

This is why nations are investing billions in AI infrastructure sovereignty—France committed €109 billion because they analyzed what happens when critical infrastructure is controlled by external parties with vendor ecosystems you can't see. If governments treat platform dependency as an existential risk requiring €100B+ responses, why would your product team treat 18-day breach notification windows as acceptable?

The Pattern You've Seen Before

This isn't an indictment of OpenAI specifically. It's a reflection of how platform dependency actually works. When you build on a platform, you're not just trusting that platform. You're trusting every vendor that platform trusts. Every analytics tool. Every monitoring service. Every infrastructure provider. Every third-party JavaScript snippet embedded in their frontend.

You're trusting an ecosystem you can't see and can't evaluate.

I've watched this inheritance pattern play out across three platform consolidation cycles over 30 years. The mechanism is always the same: companies build on platforms, platforms have vendors, vendors have vulnerabilities, and the risk flows downstream to customers who never knew the vendors existed.

Microsoft Era (1990s)

Companies that built on Microsoft infrastructure inherited Microsoft's security vulnerabilities. When a Windows exploit emerged, every company running Windows was exposed. Once Microsoft settled into its monthly Patch Tuesday cycle in 2003, that schedule became everyone's emergency: not because they chose to be dependent on Microsoft's patch schedule, but because their architecture made that dependency unavoidable.

The companies most affected weren't careless. They were efficient. They'd standardized on a platform to reduce complexity. That standardization meant they inherited every weakness in Microsoft's codebase and every delay in Microsoft's patch process.

AWS Era (2010s)

Marketplace vendors and companies building on AWS inherited AWS's compliance obligations and security model. The "shared responsibility model" created ambiguity about where AWS's security ended and yours began. S3 bucket misconfigurations—often in third-party tools—exposed customer data downstream.

When AWS had outages or security incidents, every company building on affected services felt the impact. You couldn't diversify away from the dependency because your architecture was built around AWS-specific services. You inherited the risk whether you understood it or not.

OpenAI Era (2020s)

Now we're seeing the same pattern with foundation model APIs. Companies building on OpenAI's API inherit the security posture of OpenAI's entire vendor ecosystem. You inherit their disclosure timelines. You inherit their vendor relationships. You inherit risks from companies like Mixpanel that you've never heard of and have no contractual relationship with.

The pattern compounds with each cycle. Platforms get more powerful. Dependencies get deeper. Vendor ecosystems get more complex. And the risk that flows downstream to customers gets harder to see and harder to evaluate.

| Era | Platform | Inherited Risk Example | Timeline to Impact |
|---|---|---|---|
| 1990s | Microsoft | Windows vulnerabilities → Patch Tuesday emergencies | 5-7 years |
| 2010s | AWS | S3 misconfigurations → downstream data exposure | 3-5 years |
| 2020s | OpenAI | Mixpanel breach → 18-day exposure window | 12-18 months |

The Four Dimensions of Platform Dependency Risk

Most conversations about AI platform dependency focus on two dimensions: competitive risk and cost risk.

Competitive risk: Every API call teaches the platform provider your workflows. They learn what you're building, how your customers use it, what patterns drive value. That intelligence helps them decide what to build natively—potentially competing with you using knowledge you provided.

Cost risk: Usage-based pricing creates budget unpredictability. As your product succeeds and usage grows, your costs grow too—sometimes faster than revenue. You're dependent on pricing decisions you don't control.

These risks are real. They're core to the Triple Squeeze facing B2B SaaS companies—alongside pressure from AI-native competitors and customers building DIY replacements.

But the Mixpanel incident reveals two additional dimensions that most companies aren't evaluating:

Disclosure timeline inheritance: You don't just inherit your vendor's security posture. You inherit their disclosure timelines. When Mixpanel took 16 days to share data with OpenAI, every OpenAI customer inherited that delay. You can't negotiate faster notification with vendors you don't know exist.

Security surface area expansion: Your attack surface isn't just your code and your infrastructure. It includes every vendor in your platform provider's supply chain. Every analytics tool, every monitoring service, every third-party script. A breach anywhere in that chain can expose your data, your customers, and your business.
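The disclosure-timeline dimension reduces to addition. Your notification latency is the sum of every hop's delay, but your contract only bounds the hop you are party to. Here is a minimal sketch. The `Hop` model is hypothetical, and the delays come from the timeline table: 16 days from detection until Mixpanel shared the data with OpenAI, then 1 day until OpenAI notified customers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    """One notification hop between two parties (hypothetical model)."""
    sender: str
    receiver: str
    delay_days: int
    you_are_party: bool  # are you one of the two contracting parties?


def total_delay(hops: list[Hop]) -> int:
    """Your notification latency: the sum of every hop's delay."""
    return sum(hop.delay_days for hop in hops)


def negotiable_delay(hops: list[Hop]) -> int:
    """The share of that latency your own contract can bound."""
    return sum(hop.delay_days for hop in hops if hop.you_are_party)


# Delays from the timeline table, counted from breach detection (Day 0):
# Mixpanel shared the data with OpenAI on Day 16, and OpenAI notified
# customers one day later, on Day 17.
hops = [
    Hop("Mixpanel", "OpenAI", delay_days=16, you_are_party=False),
    Hop("OpenAI", "You", delay_days=1, you_are_party=True),
]

print(f"{negotiable_delay(hops)} of {total_delay(hops)} days fall under an SLA you can negotiate")
# → 1 of 17 days fall under an SLA you can negotiate
```

Even a perfect one-day SLA with your direct provider leaves the other sixteen days untouched. That is the inheritance described above.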

| Dimension | Risk | What You Inherit | Can You Evaluate? |
|---|---|---|---|
| Competitive Intelligence | Platform learns your workflows | Usage patterns, feature logic | ⚠️ Partially |
| Cost Unpredictability | Usage-based pricing volatility | Pricing decisions you don't control | ⚠️ Partially |
| Disclosure Timeline | Delayed breach notification | Vendor-to-vendor notification SLAs | ❌ No visibility |
| Security Surface Area | Expanded attack vectors | Every vendor in provider's ecosystem | ❌ No visibility |

These four dimensions compound. Competitive risk grows as you send more data through the API. Cost risk grows as usage increases. Disclosure timeline risk grows as vendor ecosystems become more complex. Security surface area grows as platforms add more third-party integrations.

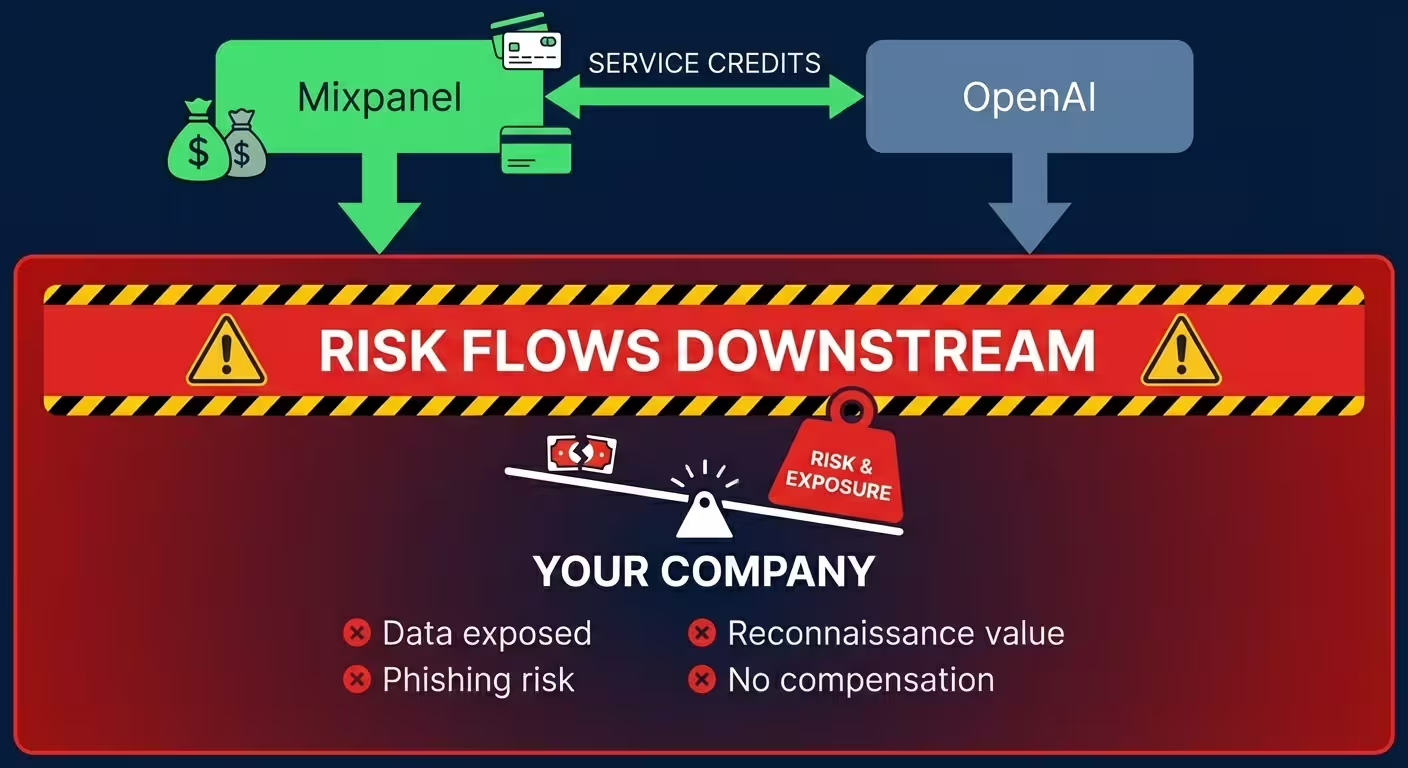

And here's the asymmetry that makes it worse:

Service credits flow between vendors. OpenAI will likely receive some form of compensation from Mixpanel for this incident—contractual remedies, service credits, whatever their agreement specifies.

Risk flows downstream to customers. The exposure, the phishing risk, the reconnaissance value—all of that lands on OpenAI's customers.

Compensation doesn't flow downstream. You inherited the breach. You inherited the risk. But you won't see any of the remediation that flows between the vendors who actually have contractual relationships.

Risk flows downstream. Compensation doesn't.

What This Means for Your Architecture

If you're a CTO or CPO at a company building on foundation model APIs, this incident should change how you evaluate platform dependency.

First, recognize that vendor evaluation is incomplete by definition. You can review your AI provider's SOC 2 report. You can negotiate data processing agreements. You can audit their security practices. But you cannot evaluate their vendor ecosystem because you don't have visibility into it. You're trusting a chain you can only see one link of.

Second, understand that you inherit timelines you can't control. Disclosure SLAs in your contract with OpenAI don't help when the breach happens at Mixpanel. The 18-day exposure window in this incident wasn't a failure of your security practices—it was a structural feature of your dependency chain.

Third, factor reconnaissance value into breach impact assessment. This incident didn't expose your customer data or your API keys. But it gave attackers a target list that makes future attacks on your company more likely and more effective. The data that was exposed has value beyond its immediate sensitivity.

Fourth, consider concentration risk. The more of your stack that depends on a single platform provider, the more you inherit from their entire ecosystem. Multi-provider strategies don't eliminate platform dependency, but they can reduce the blast radius when one provider's vendor ecosystem fails. Building decision intelligence with a three-tier model selection strategy gives you architectural options beyond full platform dependency.
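In code, reducing the blast radius usually starts with a seam: your product calls an interface you own rather than one vendor's SDK. Here is a minimal sketch. All names are hypothetical (`CompletionProvider`, `complete_with_fallback`, `StubProvider`), and the stubs stand in for real API clients. Real routing would also weigh model quality, cost, and data-handling terms.

```python
from typing import Protocol, Sequence


class ProviderUnavailable(Exception):
    """Raised when a provider is down or deliberately disabled after an incident."""


class CompletionProvider(Protocol):
    """The minimal interface your product codes against (hypothetical)."""
    name: str

    def complete(self, prompt: str) -> str: ...


def complete_with_fallback(providers: Sequence[CompletionProvider], prompt: str) -> tuple[str, str]:
    """Try providers in order and return (provider name, result) from the first that succeeds."""
    errors: list[str] = []
    for provider in providers:
        try:
            return provider.name, provider.complete(prompt)
        except ProviderUnavailable as exc:
            errors.append(f"{provider.name}: {exc}")
    raise ProviderUnavailable("all providers failed: " + "; ".join(errors))


class StubProvider:
    """Stand-in for a real vendor client; no network calls are made."""

    def __init__(self, name: str, available: bool = True) -> None:
        self.name = name
        self.available = available

    def complete(self, prompt: str) -> str:
        if not self.available:
            raise ProviderUnavailable("disabled pending incident review")
        return f"[{self.name}] {prompt}"


# The primary provider has been switched off after a vendor incident,
# so the request falls through to the secondary one.
primary = StubProvider("primary", available=False)
secondary = StubProvider("secondary")
print(complete_with_fallback([primary, secondary], "Summarize this ticket"))
# → ('secondary', '[secondary] Summarize this ticket')
```

Ordering providers in a list keeps the failover policy in one place. Disabling a provider after an incident then becomes a configuration change rather than a rewrite. It does not remove the dependency, because each provider still brings its own vendor ecosystem.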

Fifth, build visibility into your dependency map. You may not be able to evaluate your provider's vendors, but you can at least know what data flows where. What information does your OpenAI integration expose about your team? What would an attacker learn from breaching your platform provider's analytics tools?
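Part of that dependency map can be built mechanically. You can list the third-party script hosts a page loads and compare them with the vendors your provider discloses. Here is a minimal Python sketch using only the standard library's `html.parser` and `urllib.parse`. The page markup and hostnames are hypothetical placeholders, and `disclosed` stands in for a provider's published sub-processor list.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ScriptHostCollector(HTMLParser):
    """Collect the hostname of every <script src=...> tag in an HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.hosts: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        src = dict(attrs).get("src")
        if not src:
            return  # inline script: no external host to record
        host = urlparse(src).hostname
        if host:  # relative URLs have no hostname and are served first-party
            self.hosts.add(host)


def undisclosed_script_hosts(html: str, first_party: set[str], disclosed: set[str]) -> set[str]:
    """Script hosts that are neither first-party nor on the disclosed vendor list."""
    collector = ScriptHostCollector()
    collector.feed(html)
    collector.close()
    return collector.hosts - first_party - disclosed


# Hypothetical page and hostnames, for illustration only.
page = """
<html><head>
  <script src="/static/app.js"></script>
  <script src="https://cdn.example-analytics.com/sdk.js"></script>
  <script src="https://widgets.example-support.com/chat.js"></script>
</head></html>
"""
print(undisclosed_script_hosts(page, first_party={"app.example.com"},
                               disclosed={"widgets.example-support.com"}))
# → {'cdn.example-analytics.com'}
```

This only sees `<script src>` tags present in the initial HTML. Many analytics snippets use a loader that injects scripts at runtime, and those would need a headless-browser crawl or Content-Security-Policy violation reports to surface.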

None of this means you shouldn't build on foundation model APIs. The capabilities are real. The productivity gains are real. The competitive pressure to ship AI features is real.

But platform dependency is a strategic decision with security implications that extend far beyond the platform itself. Your architecture inherits every weakness in your vendor's supply chain. That's not fear-mongering. It's a structural feature of how platforms work.

The Question You Should Be Asking

When you evaluate an AI platform provider, you probably review their security documentation. Their compliance certifications. Maybe their architecture diagrams.

But can you answer this question: Who are your AI provider's vendors, and what data do they collect about you?

You probably can't. I couldn't. Neither could most of the CTOs and CPOs building on these platforms.

That's the gap this incident exposes. Not a failure of security practices by any single company—but a structural blindness built into how platform dependency works.

OpenAI will improve their vendor security reviews. They said as much. Mixpanel will improve their security practices. They'll have to. The immediate vulnerability will get addressed.

But the structural issue remains. When you build on a platform, you trust everyone that platform trusts. You inherit their disclosure timelines. You expand your attack surface to include their entire vendor ecosystem. And when something goes wrong, risk flows downstream while compensation doesn't.

The question isn't "is OpenAI secure?"

It's "is everyone OpenAI trusts secure?"

Your architecture inherits every weakness in your vendor's supply chain.

Including the ones you can't see.
