What National AI Strategy Teaches SaaS Companies

Andrew Hatfield

December 9, 2025

This is Part 3 of the AI Sovereignty series. Start there for the strategic foundation.

France, Singapore, India, and the UK collectively spent tens of millions developing AI sovereignty strategies. McKinsey, BCG, and specialist policy firms produced thousands of pages analyzing dependency risks, architectural options, and strategic priorities.

The conclusions are public. They're free to read.

And they translate directly to your product architecture decisions.

Most SaaS product teams haven't read a single one.

That's a missed opportunity. These aren't abstract policy documents created by bureaucrats detached from commercial reality. They're strategic playbooks developed by people with bigger research budgets than your company's entire revenue, analyzing the same dependency problem you face, just at a different scale.

Cross-analyzing these strategies reveals consistent principles—not because governments coordinate, but because they're all solving the same fundamental problem: how to benefit from AI platforms while preserving the ability to act independently.

The Six Sovereignties Framework

When you analyze national AI strategies systematically, "sovereignty" fragments into six distinct capabilities. Each can be achieved through different means. Each has different implications for your architecture.

Understanding this framework changes how you evaluate your own AI posture.

1. Sovereignty of Infrastructure

Where your data is physically processed and stored.

France is building 35 datacenter sites, committing €109 billion to ensure compute happens on French soil with French control. Australia partnered with NEXTDC and OpenAI for local processing while accepting American model control. Singapore acknowledged they can't build at scale and are pursuing "secure access" to others' infrastructure. Each represents a different answer to what sovereignty actually means.

For your product: Do you know where your AI inference happens? Do you have options if that location becomes problematic—whether for regulatory, cost, or strategic reasons?

2. Sovereignty of Oversight

Who governs usage, audits systems, and mandates standards.

Singapore created AI Verify—the world's first AI governance testing framework—in 2022. They can't build sovereign compute, but they can define how AI systems operating in Singapore must behave. The UK introduced the Algorithmic Transparency Standard. Australia is applying existing consumer, privacy, and workplace law rather than creating new AI-specific regulation.

For your product: Regardless of which models you use, do you have internal governance frameworks? Testing protocols? Audit capabilities? Oversight doesn't require infrastructure independence.

3. Sovereignty of Capability

Domestic alternatives exist for critical use cases.

France invested in Mistral as a European alternative to American foundation models. Simon Kriss at Sovereign Australia AI fine-tuned Llama with 2 billion Australian tokens—recognizing that "most Australian business use cases are simply a language model" that doesn't require competing head-to-head with OpenAI. India proposed AIRAWAT—a cloud platform for big data analytics with AI computing infrastructure to enable domestic AI development.

For your product: For your most critical AI capabilities, do alternatives exist that you could switch to? Have you tested them? Could you migrate in weeks rather than quarters?

4. Sovereignty of Validation

Adversarial models can challenge outputs.

This is counterintuitive: validation sovereignty actually requires access to multiple models, not independence from all of them. If you only have one AI provider, you can't cross-check outputs. You can't catch hallucinations systematically. You can't validate that results are reasonable.

The Deloitte incident in Australia—where AI-generated errors including fabricated court quotes and nonexistent academic references appeared in a $440,000 government report—happened partly because single-model workflows have no adversarial check.

For your product: Can you validate AI outputs against alternative models? Do you have systematic cross-checking for high-stakes decisions?
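As a rough illustration of what systematic cross-checking could look like, here is a minimal sketch that sends the same prompt to several models and flags low agreement for human review. Everything named here is an assumption for illustration: the `cross_check` function, the stub models, and the 0.6 agreement threshold are hypothetical, and exact-match comparison only suits short, categorical answers. Free-text outputs would need a semantic comparison instead.

```python
from collections import Counter
from typing import Callable, Dict

# Hypothetical type: any function that takes a prompt and returns text.
ModelFn = Callable[[str], str]


def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace so formatting noise is not counted as disagreement."""
    return " ".join(answer.lower().split())


def cross_check(prompt: str, models: Dict[str, ModelFn], min_agreement: float = 0.6) -> dict:
    """Ask every model the same question and flag the result when agreement is low."""
    answers = {name: normalize(fn(prompt)) for name, fn in models.items()}
    top_answer, votes = Counter(answers.values()).most_common(1)[0]
    agreement = votes / len(answers)
    return {
        "answer": top_answer,
        "agreement": agreement,
        "needs_human_review": agreement < min_agreement,
        "dissenting": sorted(n for n, a in answers.items() if a != top_answer),
    }


# Stub models standing in for real provider calls (assumption, not real APIs).
stub_models = {
    "model_a": lambda prompt: "Canberra",
    "model_b": lambda prompt: "canberra ",
    "model_c": lambda prompt: "Sydney",
}

result = cross_check("What is the capital of Australia?", stub_models)
print(result["answer"], result["dissenting"], result["needs_human_review"])
```

With a single provider, the `dissenting` list can never be populated, which is the point of the section above: there is nothing to disagree with.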

5. Sovereignty of Literacy

Understanding what you're deploying and how to architect properly.

OpenAI for Australia will train 1.2 million workers and small business customers through CommBank, Coles, and Wesfarmers partnerships. Singapore runs national AI upskilling initiatives. India projected that 46% of their workforce would be in entirely new jobs or radically changed roles by 2022.

Literacy sovereignty isn't about rejecting AI—it's about having enough internal expertise to make informed decisions rather than accepting vendor recommendations uncritically.

For your product: Does your team understand AI architecture well enough to evaluate trade-offs? Or are you dependent on provider guidance for decisions that determine your strategic options?

6. Sovereignty of Choice

The ability to shift between providers, architectures, and partnerships based on evolving needs.

This is the meta-sovereignty. It's what enables all the others.

France's massive infrastructure investment is ultimately about choice—ensuring they can choose European providers, American providers, or domestic alternatives based on circumstances rather than lock-in. Singapore's "secure access" strategy explicitly preserves optionality. India's "late-mover advantage" thesis is fundamentally about choosing when and how to adopt rather than being forced by dependency.

For your product: If conditions changed tomorrow—pricing, terms, competitive dynamics, regulation—how much freedom do you have to respond? That's your sovereignty of choice.

The Key Insight

These six sovereignties don't all require the same solution.

You can have foreign models (benefiting from Sovereignty 4's validation capability) running on domestic infrastructure (Sovereignty 1) under local oversight (Sovereignty 2) with domestic alternatives available (Sovereignty 3) if you've built internal literacy (Sovereignty 5) and preserved the ability to shift (Sovereignty 6).

The goal isn't maximizing every dimension. It's intentionally choosing which matter for your context and architecting accordingly.

Translating National Principles to Product Architecture

Each national strategy element maps to a specific SaaS architecture decision. This isn't abstract—it's implementation guidance.

| National Principle | SaaS Architecture Equivalent |
|---|---|
| Compute optionality (France: 35 sites, not 1) | Multi-provider infrastructure; no single point of failure |
| Model flexibility (Mistral as alternative) | Abstraction layer enabling provider switching |
| Data sovereignty (control the value chain) | Proprietary data architecture; clear data flow governance |
| Capability stacking (build on top of access) | Decision intelligence layer using but not dependent on models |
| Governance frameworks (Singapore AI Verify) | Internal AI governance, testing, and validation protocols |
| Strategic patience (France 7-year arc) | Architecture decisions aligned to 3-5 year horizon, not sprint velocity |

Compute Optionality → Multi-Provider Strategy

France isn't building one massive datacenter. They're building 35 sites distributed across the country. The rationale: no single point of failure, no single chokepoint for control.

Your equivalent: can your AI workloads run on multiple providers? If AWS has an outage, if Azure changes terms, if your primary model provider gets acquired—do you have options?

This doesn't require running everything everywhere. It requires architecture that could shift if needed.
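One way to picture "architecture that could shift if needed" is an ordered fallback chain. The sketch below is a minimal illustration under stated assumptions, not a production pattern: the provider names and the two stub functions are hypothetical stand-ins for real vendor calls, and a real version would add timeouts, retries, and logging.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical type: a provider call takes a prompt and returns text, or raises.
ProviderCall = Callable[[str], str]


class AllProvidersFailed(RuntimeError):
    """Raised when every provider in the chain has failed."""


def complete_with_fallback(
    prompt: str, providers: Sequence[Tuple[str, ProviderCall]]
) -> Tuple[str, str]:
    """Try providers in order; return (provider_name, text) from the first that succeeds."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # broad on purpose: any failure moves to the next provider
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Stubs standing in for real vendor SDK calls (assumptions for illustration).
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary timed out")


def steady_secondary(prompt: str) -> str:
    return f"summary of: {prompt}"


used, text = complete_with_fallback(
    "Q3 churn report",
    [("primary", flaky_primary), ("secondary", steady_secondary)],
)
print(used, "->", text)
```

The secondary provider does not need to match the primary on quality. It only needs to be wired in and tested before the day you need it.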

Model Flexibility → Abstraction Layer Design

France invested in Mistral not because Mistral is necessarily better than GPT-5, but because having a European alternative preserves options. The alternative doesn't need to be equivalent—it needs to exist.

Your equivalent: an abstraction layer that defines interfaces independent of specific providers. When you call your AI services, does your code know it's talking to OpenAI? Or does it talk to an internal interface that could route to OpenAI, Anthropic, open-source models, or self-hosted alternatives?

The engineering investment is measured in weeks. The strategic value is measured in years.
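To make the idea concrete, here is a minimal sketch of such an internal interface. The names `TextModel`, `Completion`, `ModelGateway`, and `EchoProvider` are all hypothetical; a real adapter would wrap the vendor's SDK call inside `complete`, which is deliberately left out here rather than guessed at.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


@dataclass
class Completion:
    text: str
    provider: str


class TextModel(Protocol):
    """The only contract application code is allowed to depend on."""

    def complete(self, prompt: str) -> Completion: ...


class EchoProvider:
    """Stand-in adapter; a real one would call a vendor SDK inside complete()."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> Completion:
        return Completion(text=f"[{self.name}] {prompt}", provider=self.name)


class ModelGateway:
    """Routes calls to the active provider; switching is a config change, not a rewrite."""

    def __init__(self, providers: Dict[str, TextModel], default: str) -> None:
        if default not in providers:
            raise ValueError(f"unknown provider: {default}")
        self._providers = providers
        self._active = default

    def use(self, name: str) -> None:
        if name not in self._providers:
            raise ValueError(f"unknown provider: {name}")
        self._active = name

    def complete(self, prompt: str) -> Completion:
        return self._providers[self._active].complete(prompt)


gateway = ModelGateway(
    {"hosted": EchoProvider("hosted"), "self_hosted": EchoProvider("self_hosted")},
    default="hosted",
)
before = gateway.complete("Summarize this ticket")
gateway.use("self_hosted")  # application code above this line does not change
after = gateway.complete("Summarize this ticket")
print(before.provider, "->", after.provider)
```

Application code only ever sees `gateway.complete(...)`, so it never learns which vendor answered unless it inspects `Completion.provider`.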

Data Sovereignty → Proprietary Data Architecture

The French AI Commission's consistent theme: "Those who control data control AI." Every national strategy emphasizes that data is the long-term strategic asset, not model access.

Your equivalent: clear understanding of what data you're sending to providers, what learning rights you're granting, and what proprietary data assets you're building that create defensibility regardless of which models you use.

Your moat is what you know that models don't. Architecture should protect and compound that advantage.

Capability Stacking → Decision Intelligence Layer

India's NITI Aayog strategy doesn't aim to build better foundation models than OpenAI. It aims to build capabilities on top of whatever models are available—capabilities tailored to Indian needs that create value regardless of which underlying models power them.

Your equivalent: the intelligence layer you build on top of models. Not just "we use GPT-4 for summarization" but "we have proprietary decision logic that uses model capabilities as inputs to judgments that reflect our domain expertise."

This is the difference between AI features and AI transformation. Features use models. Transformation builds intelligence that happens to use models as components. This is also why the dependency channels we examined earlier—compute, model updates, and telemetry—matter so much: they determine whether your intelligence layer remains yours.
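A small sketch may help separate "feature" from "intelligence layer". In the example below the model contributes one signal (a sentiment score), while the decision comes from rules over proprietary account data. The scenario, field names, and thresholds are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical type: any model that maps text to a sentiment score in [-1, 1].
SentimentModel = Callable[[str], float]


@dataclass
class Account:
    name: str
    seats_used_pct: float  # proprietary usage data the model never sees
    open_tickets: int
    last_note: str


def churn_decision(account: Account, sentiment_model: SentimentModel) -> str:
    """Combine one model signal with domain rules; thresholds are illustrative only."""
    sentiment = sentiment_model(account.last_note)  # the model is an input, not the judge
    risk = 0
    if sentiment < -0.3:
        risk += 1
    if account.seats_used_pct < 0.4:
        risk += 1
    if account.open_tickets >= 3:
        risk += 1
    return {0: "healthy", 1: "watch", 2: "outreach"}.get(risk, "escalate")


# Stub model: swap in any provider without touching churn_decision.
def stub_sentiment(text: str) -> float:
    return -0.8 if "frustrated" in text else 0.5


at_risk = Account("Acme", seats_used_pct=0.3, open_tickets=4, last_note="Team is frustrated")
steady = Account("Globex", seats_used_pct=0.9, open_tickets=0, last_note="Renewal on track")
print(churn_decision(at_risk, stub_sentiment), churn_decision(steady, stub_sentiment))
```

Replacing the sentiment model changes one argument. The decision logic and the data behind it stay in your codebase.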

The "Strategic Patience" Insight

National strategies reveal something product teams often miss: sovereignty architecture has a timeline measured in years, not sprints.

France's arc spans seven years. 2018: Villani Report diagnosed the "digital colonies" problem. 2024: €25 billion commitment. 2025: €109 billion infrastructure buildout. They didn't react to a crisis—they saw a pattern and executed against it systematically.

India's NITI Aayog strategy explicitly references 5-10 year horizons. Singapore's NAIS 2.0 shifted framing from "opportunity" to "necessity"—a multi-year evolution in how they think about AI's role.

Meanwhile, most product teams plan in quarters.

Why This Matters for Your Roadmap

The decisions you make this quarter constrain your options for years.

AWS dependency took 3-5 years to become painful. Companies that went "all-in" in 2015 spent 2020-2023 on expensive repatriation projects. Those who built abstraction layers early had dramatically easier transitions.

AI dependency is compressing that timeline. The integration depth, the prompt engineering investment, the fine-tuning, the organizational learning—all create switching costs that accumulate faster than cloud ever did. This is the compounding effect that makes the Triple Squeeze inescapable—each response to one threat accelerates the others.

If you wait until dependency is painful to address it, you're already years behind.

The Late-Mover Advantage

India's strategic frame offers a different perspective: "Adapting and innovating the technology for India's unique needs and opportunities would help it in leap frogging, while simultaneously building the foundational R&D capability."

You don't need to pioneer. You need to architect strategically.

The companies that will win aren't necessarily the ones who shipped AI features first. They're the ones who learned from early patterns and built architecture that compounds rather than constrains.

If you're not first to market with AI features, you can still be first to market with AI architecture that preserves optionality. That's a different kind of advantage—one that becomes more valuable as the market matures.

Quick Wins You Can Implement This Quarter

Strategic patience doesn't mean waiting. It means prioritizing architectural decisions while you still have options.

These changes take weeks, not quarters. They create disproportionate value.

Quick Win 1: Model Abstraction Layer

Investment: 2-4 weeks of engineering

Value: Foundation for provider switching

Define interfaces that are provider-agnostic. Your application code calls your abstraction; your abstraction calls specific providers. When you need to switch—or A/B test, or add fallbacks—you change the abstraction layer, not your entire codebase.

This isn't complex. It's interface design. But most teams skip it because the first provider works fine. Then they're locked in.

Quick Win 2: Data Flow Audit

Investment: Days of analysis

Value: Visibility into what you're sharing

Document what data goes where. What's sent to your AI provider? What learning rights have you granted? What proprietary data is being used to train systems you don't control? The Mixpanel breach showed how data flows through vendor ecosystems you never evaluated—your architecture inherits every weakness in that chain.

Most companies can't answer these questions today. You can't make informed architecture decisions without this visibility.
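The audit can start as a plain inventory. Here is a minimal sketch, assuming a hypothetical `DataFlow` record and made-up destinations, that surfaces the two findings that matter most: flows where nobody has checked the training rights, and flows where customer data may train a vendor's models.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DataFlow:
    data: str
    destination: str
    contains_customer_data: bool
    training_allowed: Optional[bool]  # None means nobody has checked the terms yet


def audit(flows: List[DataFlow]) -> List[str]:
    """Return human-readable findings for flows that need attention."""
    findings: List[str] = []
    for flow in flows:
        route = f"{flow.data} -> {flow.destination}"
        if flow.training_allowed is None:
            findings.append(f"UNKNOWN training rights: {route}")
        elif flow.training_allowed and flow.contains_customer_data:
            findings.append(f"REVIEW customer data may train vendor models: {route}")
    return findings


# Illustrative inventory; the entries are invented for this example.
flows = [
    DataFlow("support tickets", "llm_api", True, None),
    DataFlow("product docs", "llm_api", False, True),
    DataFlow("usage events", "analytics_vendor", True, True),
]

findings = audit(flows)
for line in findings:
    print(line)
```

The `None` case is the important design choice: "we don't know" is recorded as its own state instead of being silently treated as "no".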

Quick Win 3: Terms of Service Review

Investment: Hours of reading

Value: Understanding your actual contractual position

Read what you signed. Note provisions around training data usage, competitive restrictions, pricing change mechanisms, and data retention.

When OpenAI or Anthropic or Microsoft updates terms, you'll know whether it affects you. Right now, most product leaders don't.

Quick Win 4: Optionality Assessment

Investment: Team discussion

Value: Honest view of your switching capability

For each AI feature, answer: How long to switch providers? Hours? Days? Quarters?

Features that could switch in days give you optionality. Features that would take quarters mean you've already made your choice—you just haven't acknowledged it.
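The team discussion can end in something as simple as the sketch below, which sorts features into switching-time buckets. The feature names, estimates, and bucket boundaries are assumptions for illustration; pick thresholds that match how your team actually plans.

```python
from typing import Dict, List


def switching_bucket(estimated_days: float) -> str:
    """Map an estimated switching effort (in working days) to a coarse bucket."""
    if estimated_days < 1:
        return "hours"
    if estimated_days <= 10:
        return "days"
    if estimated_days <= 60:
        return "weeks"
    return "quarters"


# Hypothetical estimates gathered from the team, in working days.
estimates: Dict[str, float] = {
    "ticket summarization": 0.5,
    "semantic search": 8,
    "fine-tuned scoring model": 120,
}

assessment = {feature: switching_bucket(days) for feature, days in estimates.items()}
locked_in: List[str] = [f for f, bucket in assessment.items() if bucket == "quarters"]

print(assessment)
print("Already committed:", locked_in)
```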

Quick Win 5: Dependency Dashboard

Investment: Basic tracking setup

Value: Ongoing visibility into concentration

Track what percentage of AI features depend on your primary provider. Track how that percentage changes over time. Track the estimated switching cost.

What gets measured gets managed. Most companies don't measure AI dependency. They should.
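A first version of the dashboard can be a single function run on a schedule. This is a minimal sketch with hypothetical feature records and provider labels; it computes the share of AI features on the primary provider and the total estimated switching cost for those features.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AIFeature:
    name: str
    provider: str
    switch_cost_days: float  # the team's own estimate, not a measured value


def dependency_snapshot(features: List[AIFeature], primary: str) -> dict:
    """Summarize concentration on the primary provider at one point in time."""
    if not features:
        raise ValueError("no features to assess")
    on_primary = [f for f in features if f.provider == primary]
    return {
        "primary_share_pct": round(100 * len(on_primary) / len(features), 1),
        "switch_cost_days": sum(f.switch_cost_days for f in on_primary),
    }


# Illustrative inventory; names and numbers are invented for this example.
features = [
    AIFeature("summarization", "vendor_a", 5),
    AIFeature("search", "vendor_a", 20),
    AIFeature("scoring", "vendor_a", 60),
    AIFeature("translation", "vendor_b", 3),
]

snapshot = dependency_snapshot(features, primary="vendor_a")
print(snapshot)
```

Storing one snapshot per month is enough to see whether concentration is rising or falling.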

The Governments Did the Strategic Thinking

France spent millions analyzing AI dependency. Singapore commissioned expert analysis of compute constraints. India developed sophisticated frameworks for late-mover advantage. The UK evaluated governance options extensively.

Their conclusions are published. Their frameworks are documented. Their strategic reasoning is available.

The least you can do is read them, and recognize that the patterns they identified apply to your product architecture in the same way, just at a different scale.

If Singapore's government, with all their resources, concluded they couldn't build sovereign compute and needed to pursue "secure access" instead—what makes you confident your product team has fully evaluated your options?

But there's one constraint that even the most sophisticated national strategies underweight. The compute, talent, and policy stability required to maintain optionality don't scale with demand. The window for architectural decisions is closing faster than most realize.

That's what we'll examine in The Constraint Nobody's Modeling.

Let's Discuss Your AI StrategyLet's Discuss Your AI Strategy

30-minute discovery call to explore your AI transformation challenges, competitive positioning, and strategic priorities. No pressure—just a conversation about whether platform independence makes sense for your business.

Dive deeper into the state of SaaS in the age of AI

Understand the market forces reshaping SaaS—from platform consolidation to AI-native competition

The State of SaaS in the Age of AIThe Constraint Nobody's Modeling

The State of SaaS in the Age of AIThe Constraint Nobody's ModelingEvery AI sovereignty strategy makes the same assumption: when you're ready to build independence, the resources will be available. Analyze the economics and you'll find that assumption is wrong. Compute is concentrating. Talent is concentrating. Policy is unstable. The window is closing.

Andrew Hatfield

Andrew HatfieldDecember 9, 2025

The State of SaaS in the Age of AIThe Dependency Architecture Being Built for Allies

The State of SaaS in the Age of AIThe Dependency Architecture Being Built for AlliesOpenAI's 'sovereign deployment options' sound like independence. The US government's AI Action Plan describes these as partnerships. Read the actual documents and you'll find explicit language about 'exporting American AI to allies.' That's not a sovereignty framework—it's a dependency architecture with better PR.

Andrew Hatfield

Andrew HatfieldDecember 9, 2025

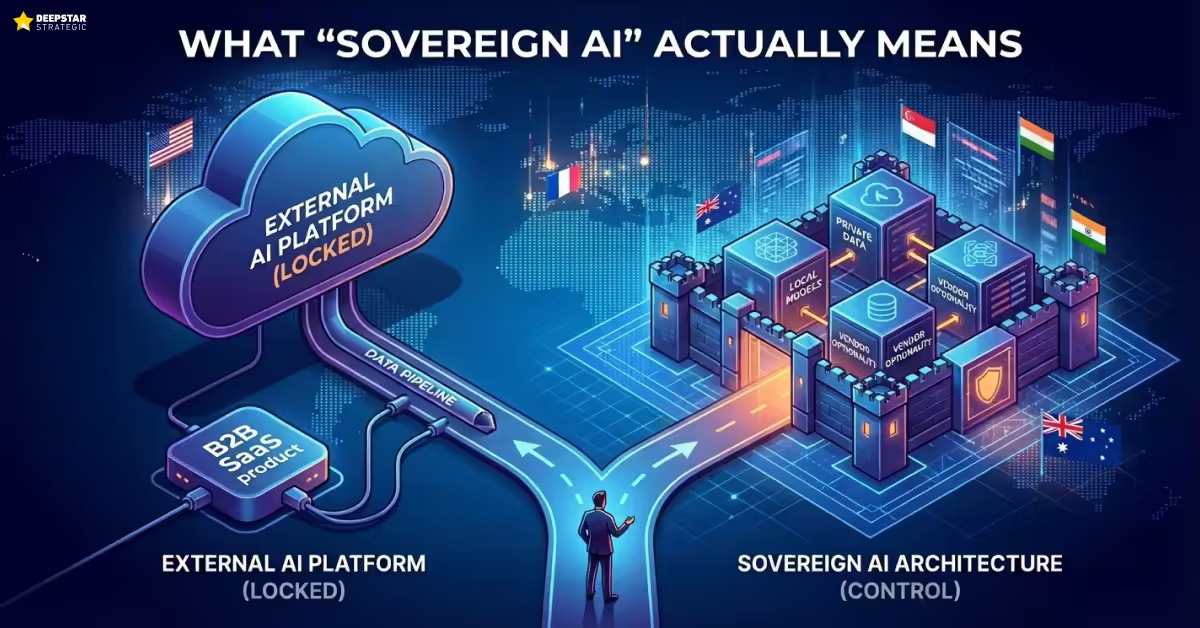

The State of SaaS in the Age of AIWhat 'Sovereign AI' Actually Means for Your Product Strategy

The State of SaaS in the Age of AIWhat 'Sovereign AI' Actually Means for Your Product StrategyFrance is spending €109 billion on AI infrastructure. Singapore admits GPUs are in short supply and they face 'intense global competition' to access them. Your product team is debating whether to increase OpenAI API spend by 20%. These aren't different conversations—they're the same conversation at different scales.

Andrew Hatfield

Andrew HatfieldDecember 9, 2025

The State of SaaS in the Age of AIThe Security Cost of Platform Dependency: What the OpenAI/Mixpanel Breach Reveals About Inherited Risk

The State of SaaS in the Age of AIThe Security Cost of Platform Dependency: What the OpenAI/Mixpanel Breach Reveals About Inherited RiskOpenAI says the Mixpanel breach wasn't their breach. Technically correct. Strategically irrelevant. Your architecture inherits every weakness in your vendor's supply chain.

Andrew Hatfield

Andrew HatfieldNovember 27, 2025