The Constraint Nobody's Modeling

Every AI sovereignty strategy makes the same assumption: when you're ready to build independence, the resources will be available. Analyze the economics and you'll find that assumption is wrong. Compute is concentrating. Talent is concentrating. Policy is unstable. The window is closing.

Andrew Hatfield

December 9, 2025

This is Part 4 of the AI Sovereignty series. Start at the beginning of the series for the strategic foundation.

Every national AI strategy makes the same assumption. Every SaaS company's "we'll figure out AI architecture later" plan makes it too.

The assumption: when you're ready to build AI sovereignty—when you've finished the current roadmap, secured the budget, hired the team—the compute, talent, and stable policy environment will be available.

That assumption is wrong. And the window for correcting it is closing.

Compute is concentrating. Talent is concentrating. Policy is unstable. Architectural decisions compound in ways that close options faster than new ones open. The question isn't whether to pursue sovereignty. It's whether you'll still be able to when you finally decide you need it.

The Compute Constraint

AI compute doesn't follow software scaling curves. Data centers require physical construction. Power infrastructure requires grid upgrades. GPU manufacturing has finite capacity. These are atoms, not bits.

The Stargate project illustrates where the capacity is going. $500 billion committed over four years. 7 gigawatts of planned capacity—roughly the output of seven nuclear reactors dedicated to AI compute. First site operational in Abilene, Texas. Five additional sites announced across Texas, New Mexico, and Ohio.

According to the Financial Times, OpenAI intends to be the customer for all of this capacity—which it needs to train and run its AI models.

That's not neutral capacity being added to the market. That's captive infrastructure serving a single provider's interests.

France understood this constraint. Their €109 billion investment, spread across 35 datacenter sites, is fundamentally about ensuring they have access to compute capacity that isn't subject to someone else's allocation decisions. They're building because they can't assume compute will be available when they want it. That is the strategic sovereignty that separates real independence from mere geographic residency.

Singapore understood it differently. Their National AI Strategy 2.0 states it plainly:

"GPUs are in short supply, and we face intense global competition to access them."

Singapore is a wealthy, sophisticated country with strong relationships across the technology landscape. They looked at the compute market and concluded they cannot build sovereign infrastructure at scale. The best they can do is "secure access"—strategic partnerships that give them priority but not control.

If Singapore can't assume compute availability, why would your product team?

Economic Instability, Either Way

Here's what makes the compute constraint particularly unstable: even the economics of hyperscaler investment are being questioned.

IBM CEO Arvind Krishna recently argued that hyperscaler AI investments may never generate adequate returns. His math: building one gigawatt of data center capacity costs roughly $80 billion. If hyperscalers collectively add 100 gigawatts, that's $8 trillion in capital expenditure—requiring approximately $800 billion in annual profit just to cover interest costs. And the hardware becomes obsolete every five years.

"It's my view that there's no way you're going to get a return on that."

— Arvind Krishna, IBM CEO
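Krishna's arithmetic is easy to reproduce from the figures quoted above. In this minimal Python sketch, the 10% cost of capital is an assumption inferred from his $800 billion interest figure, not a rate he is quoted as using. The replacement line treats all capex as five-year hardware, which overstates the real number because buildings and power infrastructure outlast GPUs.

```python
# Back-of-envelope reproduction of the hyperscaler capex argument quoted above.
# Assumption: the $800B "interest" figure implies a ~10% annual cost of capital.

COST_PER_GW_USD = 80e9        # ~$80B to build one gigawatt of capacity
ADDED_CAPACITY_GW = 100       # collective hyperscaler build-out in Krishna's example
COST_OF_CAPITAL = 0.10        # assumed rate implied by the $800B figure
HARDWARE_LIFETIME_YEARS = 5   # "obsolete every five years"


def capex_usd(gw: float, cost_per_gw: float = COST_PER_GW_USD) -> float:
    """Total capital expenditure for a given build-out."""
    return gw * cost_per_gw


def annual_interest_usd(capex: float, rate: float = COST_OF_CAPITAL) -> float:
    """Profit needed each year just to service the capital."""
    return capex * rate


def annual_replacement_usd(capex: float, years: int = HARDWARE_LIFETIME_YEARS) -> float:
    """Straight-line replacement cost if all capex were short-lived hardware.

    An upper-bound illustration only: buildings and power last longer than GPUs.
    """
    return capex / years


if __name__ == "__main__":
    total = capex_usd(ADDED_CAPACITY_GW)
    print(f"capex:       ${total / 1e12:.1f}T")
    print(f"interest/yr: ${annual_interest_usd(total) / 1e9:.0f}B")
    print(f"replace/yr:  ${annual_replacement_usd(total) / 1e12:.1f}T")
```

Run as a script, it prints $8.0T of capex, $800B a year in interest, and $1.6T a year if everything truly had to be replaced on a five-year cycle.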

Whether Krishna is right or wrong, the implication for companies building on these platforms is the same.

If he's right, expect pricing pressure as hyperscalers chase profitability. API costs that seem stable today could increase dramatically as providers seek returns on trillion-dollar investments. The "cheap inference forever" assumption underlying many product strategies would break.

We've seen this before. In the cloud era, conventional wisdom held that prices would decrease indefinitely as infrastructure scaled. I argued this was obvious nonsense—eventually you hit a floor you can't sustain, and once you've established market dominance you no longer need to buy the market. You can monetize it instead.

That's exactly what happened. Dropbox's 2016 repatriation—which saved them $75 million over two years—was an early signal that cloud economics weren't trending where vendors promised. AWS operating margins improved as they scaled, reaching 38% in 2024—their highest in at least a decade. The efficiency gains went to shareholders, not customers. And the architecture made exit expensive by design: cheap to move data in, costly to move it out.

AI inference pricing is following the same pattern, compressed. The current rates reflect market-capture economics, not sustainable margins. And the dependency architecture is familiar—easy API integration, but try extracting your fine-tuning investments, your prompt libraries, your organizational learning around specific model behaviors.

If he's wrong—if hyperscalers do find ways to monetize these investments—expect aggressive consolidation. Platforms with $8 trillion in infrastructure will need to capture more value from the ecosystem to justify returns. That means more native features competing with your product, more aggressive terms, more pressure to lock in customers.

Either scenario increases dependency risk. The compute infrastructure you're building on is either economically unstable or controlled by entities with a massive incentive to extract value from their position. This is the platform consolidation pattern playing out in real time, and each cycle compresses faster than the last.

What This Means for Architecture Decisions

Waiting to build AI independence means competing for constrained resources against better-capitalized players.

The hyperscalers are investing hundreds of billions. The AI labs are consuming capacity as fast as it comes online. Nation-states with sovereign ambitions are reserving allocation.

If your strategy requires significant compute capacity for self-hosted models or custom training, that capacity may not be available at the price—or timeline—you're assuming. The longer you wait, the more constrained the market becomes.

The companies that secured cloud infrastructure early in the AWS era had options that latecomers didn't. The same dynamic is playing out in AI compute, compressed into a shorter timeline.

The Talent Constraint

AI engineering talent capable of building sovereignty architecture is scarce. And it's concentrating in places that aren't your company.

OpenAI, Anthropic, Google DeepMind, Meta AI—the major labs are absorbing talent at rates that reshape the market. Compensation packages at frontier AI companies have disconnected from normal tech salary bands. The engineers who understand model architecture, training pipelines, and inference optimization at production scale are being hired by the platforms you'd be building independence from.

This creates a compounding problem. The labs get the best talent. The best talent builds the most capable systems. The most capable systems attract more users. More users generate more revenue. More revenue funds higher compensation. Higher compensation attracts more talent.

The cycle concentrates expertise in a small number of organizations.

The "We'll Hire When We're Ready" Fallacy

If your AI sovereignty strategy depends on hiring expertise when you need it, you're depending on a market that's tightening, not loosening.

The talent constraint isn't just about compensation—though that's real. It's about the pipeline. The number of engineers with production AI experience is finite. PhD programs produce researchers, but translating research into production systems requires additional years of experience that most engineers don't yet have.

The pattern mirrors what happened with cloud architecture expertise in 2010-2015. Companies that built cloud-native teams early accumulated years of institutional knowledge. Companies that waited found themselves competing for scarce talent while trying to catch up on fundamentals their competitors had already mastered.

By 2020, the gap between cloud-mature and cloud-lagging organizations was measured in years of capability difference—and that gap proved difficult to close regardless of hiring investment.

The same dynamic is playing out in AI, faster.

The Institutional Knowledge Problem

Even if you can hire AI expertise, building institutional knowledge takes time.

Understanding your specific data, your specific use cases, your specific quality requirements—that knowledge doesn't transfer from previous employers. It develops through iteration with your systems.

A team hired today needs 12-24 months to develop deep familiarity with your context. A team hired in 2027 needs the same ramp time but starts further behind the curve.

The organizations building AI capability now will have years of compounded learning by the time latecomers begin. AI-native startups are already achieving 100% growth while traditional SaaS stalls at 23%—a 4.3x performance advantage that compounds with every quarter of architectural lead.
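The compounding claim above can be made concrete. The 4.3x figure is a ratio of growth rates (100 / 23 ≈ 4.3). This minimal Python sketch assumes, purely for illustration, that both cohorts start at equal revenue and sustain those rates year over year, which real companies rarely do.

```python
# Illustrative only: assumes both cohorts start at equal revenue and
# sustain constant annual growth, which real companies rarely do.

AI_NATIVE_GROWTH = 1.00     # 100% annual growth, from the figure above
TRADITIONAL_GROWTH = 0.23   # 23% annual growth, from the figure above


def revenue_multiple(growth_rate: float, years: int) -> float:
    """Revenue after `years` of compounding, relative to a starting value of 1."""
    return (1 + growth_rate) ** years


def relative_lead(years: int) -> float:
    """How many times larger the AI-native cohort is after `years`."""
    return revenue_multiple(AI_NATIVE_GROWTH, years) / revenue_multiple(TRADITIONAL_GROWTH, years)


if __name__ == "__main__":
    print(f"growth-rate ratio: {AI_NATIVE_GROWTH / TRADITIONAL_GROWTH:.1f}x")
    for years in (1, 2, 3):
        print(f"after {years} year(s): {relative_lead(years):.2f}x larger")
```

Under these assumptions the revenue gap is about 1.6x after one year and about 4.3x after three: the advantage in growth rate turns into a comparable advantage in absolute scale.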

The Policy Instability Constraint

This is the constraint that most strategies ignore entirely: the regulatory environment for AI is unstable in ways that make long-term planning unreliable.

US AI policy reversed 180 degrees in hours.

Biden's Executive Order 14110, issued October 2023, established comprehensive safety requirements, created the AI Safety Institute, mandated equity considerations, and included worker protections. It represented one coherent vision for AI governance.

Trump's administration revoked it on January 20, 2025. Day one. The entire framework disappeared.

The replacement—the July 2025 AI Action Plan—embodies a fundamentally different philosophy. Where Biden emphasized safety and guardrails, Trump emphasizes acceleration and deregulation. Where Biden created the AI Safety Institute, Trump rebranded it as the Center for AI Standards and Innovation, literally removing "Safety" from the name. Where Biden's NIST framework included considerations for misinformation, equity, and climate, Trump's directive orders those references eliminated.

I'm not arguing which approach is correct. That's politics. What matters for your architecture and your roadmap is that the policy environment you build on can reverse completely with an election, and already has. It could reverse again in four years, or sooner if political conditions shift.

The State-Level Complication

The federal regulatory vacuum isn't creating clarity—it's creating fragmentation.

California has advanced AI regulations. Colorado has enacted AI governance requirements. Other states are developing their own frameworks. A patchwork of inconsistent state requirements may prove more constraining than unified federal regulation would have been.

While the federal government may slow regulatory enforcement, states are likely to fill the void.

If your AI architecture assumes stable policy—in either direction—you're assuming something that demonstrably doesn't exist.

Architecture as the Only Stable Ground

When policy can shift unpredictably, the only defensible position is architecture that can adapt to whatever environment emerges.

An abstraction layer that lets you swap model providers also lets you respond to regulatory changes. Multi-provider capability isn't just risk management for vendor terms—it's risk management for policy shifts. Clear data governance isn't just good practice—it's preparation for compliance requirements that may emerge.

The companies that navigate the next decade successfully won't be the ones who bet correctly on which regulatory regime prevails. They'll be the ones who built architecture flexible enough to operate under multiple regimes.
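A minimal Python sketch of that flexibility, assuming you keep regulatory constraints as data rather than scattering them through feature code. The jurisdictions, provider names, and the self-hosting rule are hypothetical placeholders, not real legal requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JurisdictionPolicy:
    """Hypothetical per-jurisdiction rules; real requirements need legal review."""
    allowed_providers: frozenset[str]
    require_self_hosted_for_pii: bool = False


# Placeholder policy table. When rules change, you edit this data;
# application code that calls eligible_providers() does not change.
POLICIES: dict[str, JurisdictionPolicy] = {
    "us-federal": JurisdictionPolicy(frozenset({"provider_a", "provider_b", "self_hosted"})),
    "us-state-x": JurisdictionPolicy(
        frozenset({"provider_b", "self_hosted"}),
        require_self_hosted_for_pii=True,
    ),
}


def eligible_providers(jurisdiction: str, contains_pii: bool) -> list[str]:
    """Providers a request may use under the current policy table."""
    policy = POLICIES[jurisdiction]
    if contains_pii and policy.require_self_hosted_for_pii:
        # Sensitive data must stay on infrastructure you control.
        return ["self_hosted"] if "self_hosted" in policy.allowed_providers else []
    return sorted(policy.allowed_providers)


if __name__ == "__main__":
    for region in POLICIES:
        print(region, eligible_providers(region, contains_pii=True))
```

When a new regime arrives, you update `POLICIES` and routing decisions follow, without touching the features that depend on them.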

The Compounding Effect

Each of these constraints would be manageable in isolation. Together, they compound.

Limited compute means you can't just build your way out of dependency when you decide to. Scarce talent means you can't just hire expertise when you need it. Policy instability means you can't just wait for clarity before committing.

And meanwhile, every sprint you ship without sovereignty architecture deepens your lock-in.

Every feature integrated with your primary AI provider increases switching costs. Every prompt optimization tuned to specific model behavior creates migration friction. Every workflow your organization builds around current tools creates institutional dependency. Every month of accumulated usage data trains systems you don't control. These are the dependency channels compounding against you.

The decisions you defer aren't waiting. They're compounding against you.

AWS dependency took 3-5 years to become painful. By year five, repatriation projects cost billions and took years to execute. Companies that built abstraction layers in year one had fundamentally different options than those who waited until year five. Meanwhile, as your customers gain access to the same AI tools you use, they're building "good enough" replacements over a weekend, which validates that your workflows are simpler than your pricing suggests.

AI dependency is compressing that timeline. The integration is deeper. The data flows are more sensitive. The learning effects are more pronounced. What took five years with cloud infrastructure is happening in 18-24 months with AI.

If you're waiting to address architecture until dependency becomes painful, you're already behind.

What This Means for Your Next Quarter

The constraint reality doesn't mean panic. It means prioritizing architectural decisions while you still have options.

The Three Decisions That Can't Wait

1. Abstraction Layer Implementation

Every sprint without a provider-agnostic abstraction layer deepens lock-in. The engineering investment is measured in weeks. The optionality it creates lasts years.

This is the foundation for everything else. You can't switch providers without it. You can't test alternatives without it. You can't respond to changing conditions without it.

Build this now. Not next quarter. Now.
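The core of a provider-agnostic abstraction layer is small: application code depends on an interface you own, and each vendor sits behind an adapter. A minimal Python sketch using `typing.Protocol`; `CompletionProvider`, `StubVendorAdapter`, and `summarize_ticket` are hypothetical names, and the adapter is a stub where a real vendor SDK call would go.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Completion:
    """Provider-neutral response shape that your codebase owns."""
    text: str
    provider: str


class CompletionProvider(Protocol):
    """The only model interface application code is allowed to depend on."""
    name: str

    def complete(self, prompt: str, max_tokens: int = 256) -> Completion: ...


class StubVendorAdapter:
    """Hypothetical adapter; a real one would call a vendor SDK inside complete()."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str, max_tokens: int = 256) -> Completion:
        # Vendor-specific request/response translation lives here and nowhere else.
        # A real adapter would forward max_tokens; this stub just echoes the prompt.
        return Completion(text=f"[{self.name}] {prompt}", provider=self.name)


def summarize_ticket(provider: CompletionProvider, ticket: str) -> str:
    """A product feature written against the interface, not a vendor SDK."""
    return provider.complete(f"Summarize: {ticket}").text


if __name__ == "__main__":
    # Swapping vendors means changing this one line, not every feature.
    provider: CompletionProvider = StubVendorAdapter("vendor_a")
    print(summarize_ticket(provider, "Login fails after password reset"))
```

Because `summarize_ticket` never imports a vendor SDK, adding a second adapter or a self-hosted model leaves feature code untouched.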

2. Data Flow Governance

Understanding what data goes where is a prerequisite for any sovereignty discussion. If you can't answer "what are we sending to our AI provider?" you can't evaluate your options.

Audit your data flows. Document what's shared. Understand what learning rights you've granted. This takes days of analysis, not months of engineering. There's no reason not to know.
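An audit can be backed by a thin wrapper that records, for every outbound AI call, which fields left your system, for which feature, and to which provider. A minimal Python sketch; `AuditedClient`, `DataFlowRecord`, and the field names are hypothetical, the transport is a stub, and a real version would persist records rather than keep them in memory.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass(frozen=True)
class DataFlowRecord:
    """One outbound AI call: which fields left, where they went, and why."""
    timestamp: datetime
    provider: str
    feature: str
    fields_sent: tuple[str, ...]  # field names only, never values


class AuditedClient:
    """Wraps a send function so every outbound payload is recorded first."""

    def __init__(self, provider: str, send: Callable[[dict], str]) -> None:
        self.provider = provider
        self._send = send
        self.log: list[DataFlowRecord] = []

    def call(self, feature: str, payload: dict) -> str:
        self.log.append(DataFlowRecord(
            timestamp=datetime.now(timezone.utc),
            provider=self.provider,
            feature=feature,
            fields_sent=tuple(sorted(payload)),
        ))
        return self._send(payload)

    def field_exposure(self) -> Counter:
        """Count how often each field has been sent to this provider."""
        return Counter(name for rec in self.log for name in rec.fields_sent)


if __name__ == "__main__":
    client = AuditedClient("vendor_a", send=lambda payload: "ok")  # stubbed transport
    client.call("ticket_summary", {"ticket_text": "<redacted>", "customer_email": "<redacted>"})
    client.call("search", {"query": "<redacted>"})
    print(client.field_exposure())
```

Recording field names rather than values keeps the audit log itself from becoming another copy of sensitive data.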

3. Multi-Provider Capability

Even a basic ability to route requests to alternative providers changes your strategic position. You don't need to use alternatives in production; you need to be able to.

Test Anthropic if you're on OpenAI. Test open-source models for non-critical features. Build the routing capability even if you don't use it. Having the option is different from not having it.
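Routing capability can start as an ordered list of providers with fallback, plus an optional trial provider that receives a small sampled share of non-critical traffic. A minimal Python sketch; the provider callables, `ProviderError`, and the `trial_share` parameter are hypothetical placeholders for real SDK clients and their error types.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple

# (name, callable) pairs; each callable stands in for a real SDK client.
Provider = Tuple[str, Callable[[str], str]]


class ProviderError(Exception):
    """Hypothetical error a real adapter would raise when a vendor call fails."""


class Router:
    """Try providers in order, optionally sending a sampled share to a trial provider."""

    def __init__(
        self,
        primary: Sequence[Provider],
        trial: Optional[Provider] = None,
        trial_share: float = 0.0,
        rng: Optional[random.Random] = None,
    ) -> None:
        self.primary: List[Provider] = list(primary)
        self.trial = trial
        self.trial_share = trial_share  # e.g. 0.05 sends ~5% of calls to the trial first
        self.rng = rng if rng is not None else random.Random()

    def _order(self) -> List[Provider]:
        # Sampled requests try the trial provider first; primaries remain as fallback.
        if self.trial is not None and self.rng.random() < self.trial_share:
            return [self.trial] + self.primary
        return self.primary

    def complete(self, prompt: str) -> Tuple[str, str]:
        """Return (provider_name, text) from the first provider that succeeds."""
        errors: List[str] = []
        for name, call in self._order():
            try:
                return name, call(prompt)
            except ProviderError as exc:
                errors.append(f"{name}: {exc}")
        raise ProviderError("all providers failed: " + "; ".join(errors))


if __name__ == "__main__":
    def rate_limited(prompt: str) -> str:
        raise ProviderError("rate limited")

    def backup(prompt: str) -> str:
        return f"backup answer to {prompt!r}"

    router = Router(primary=[("vendor_a", rate_limited), ("vendor_b", backup)])
    print(router.complete("hello"))
```

Setting `trial_share` to zero keeps the routing path built and tested while sending no production traffic to the alternative.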

The Three That Can Follow

1. Full Model Self-Hosting

Significant investment. Requires infrastructure, expertise, and ongoing operational capability. Important for competitive crown jewels, but builds on top of the abstraction layer and data governance.

Do this after the foundation is solid, not before.

2. Complete Data Sovereignty Architecture

The comprehensive approach to ensuring your data creates advantage only for you. Valuable but complex. Builds on the governance foundation.

Plan this carefully rather than rushing it.

3. Custom Model Training

Fine-tuning or training models on your proprietary data. Creates genuine differentiation but requires the other capabilities first.

A goal, not a starting point.

The Window Is Closing

Nations spending billions understand these constraints. They're acting now because waiting makes the problem harder, not easier.

France's €109 billion investment isn't a reaction to crisis—it's a recognition that the window for building sovereign capacity is finite. They diagnosed the problem in the 2018 Villani Report and executed against it systematically because they understood that delay would close options.

Singapore's explicit acknowledgment of compute scarcity isn't pessimism—it's realism. They're pursuing "secure access" because they analyzed the market and concluded that independent capacity isn't achievable for them at scale.

India's "late-mover advantage" thesis isn't passivity—it's strategy. They're building capability while learning from others' patterns, recognizing that the window for strategic architecture remains open even if the window for pioneering infrastructure has passed.

Your company faces the same constraints at different scale.

Compute is concentrating in captive infrastructure. Talent is concentrating at the platforms you'd be building independence from. Policy is unstable in ways that make long-term planning unreliable. And architectural decisions compound faster than most teams recognize.

The question isn't whether you'll pursue sovereignty eventually. It's whether you'll have the options to pursue it when you finally decide you need it.

In 24 months, will you be explaining to your board why you preserved strategic optionality when it was available?

Or why options that existed today no longer exist?

This series has examined how national AI sovereignty strategies reveal architectural principles for SaaS companies—from what sovereignty actually means, to the dependency architecture being built for allies, to the six strategic principles that translate to product decisions, to the constraints that make timing critical.

The question now is implementation: how do you move from dependency to decision intelligence without sacrificing delivery velocity?

That's the subject of From AI Features to AI Transformation—the methodology for building AI architecture that creates competitive advantage rather than platform dependency.


Dive deeper into the state of SaaS in the age of AI

Understand the market forces reshaping SaaS—from platform consolidation to AI-native competition

The State of SaaS in the Age of AIThe Dependency Architecture Being Built for Allies

The State of SaaS in the Age of AIThe Dependency Architecture Being Built for AlliesOpenAI's 'sovereign deployment options' sound like independence. The US government's AI Action Plan describes these as partnerships. Read the actual documents and you'll find explicit language about 'exporting American AI to allies.' That's not a sovereignty framework—it's a dependency architecture with better PR.

Andrew Hatfield

Andrew HatfieldDecember 9, 2025

The State of SaaS in the Age of AIWhat National AI Strategy Teaches SaaS Companies

The State of SaaS in the Age of AIWhat National AI Strategy Teaches SaaS CompaniesFrance, Singapore, India, and the UK collectively spent tens of millions developing AI sovereignty strategies. The conclusions are public. They translate directly to your product architecture decisions. Most SaaS product teams haven't read a single one.

Andrew Hatfield

Andrew HatfieldDecember 9, 2025

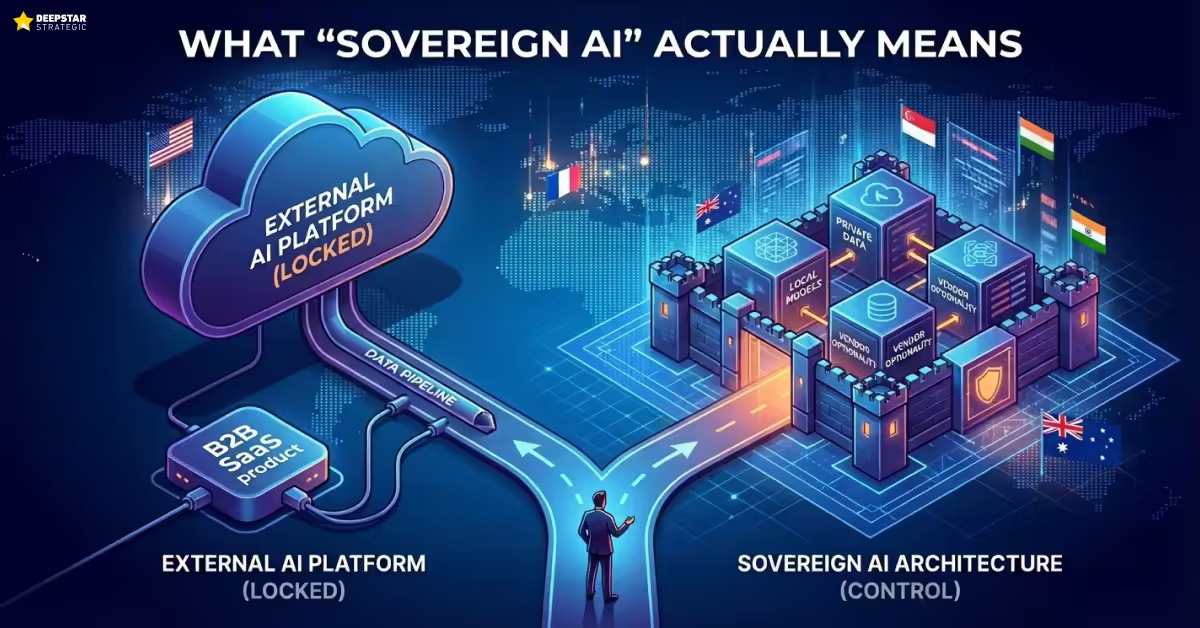

The State of SaaS in the Age of AIWhat 'Sovereign AI' Actually Means for Your Product Strategy

The State of SaaS in the Age of AIWhat 'Sovereign AI' Actually Means for Your Product StrategyFrance is spending €109 billion on AI infrastructure. Singapore admits GPUs are in short supply and they face 'intense global competition' to access them. Your product team is debating whether to increase OpenAI API spend by 20%. These aren't different conversations—they're the same conversation at different scales.

Andrew Hatfield

Andrew HatfieldDecember 9, 2025

The State of SaaS in the Age of AIThe Security Cost of Platform Dependency: What the OpenAI/Mixpanel Breach Reveals About Inherited Risk

The State of SaaS in the Age of AIThe Security Cost of Platform Dependency: What the OpenAI/Mixpanel Breach Reveals About Inherited RiskOpenAI says the Mixpanel breach wasn't their breach. Technically correct. Strategically irrelevant. Your architecture inherits every weakness in your vendor's supply chain.

Andrew Hatfield

Andrew HatfieldNovember 27, 2025